A Guide to Legal Issues with AI Actors in Advertising

Navigate the legal issues with AI actors in advertising. Our guide covers copyright, publicity rights, and FTC rules to keep your campaigns compliant and safe.

Using AI actors in your ads opens up a world of creative possibilities, but you need to understand the legal risks before you dive in. The biggest minefields are copyright infringement tied to AI training data, accidental violations of a real person's right of publicity, and penalties for deceptive practices under FTC rules and new state deepfake laws. Getting this right from the start is the surest way to avoid a very expensive legal mess.

The New Legal Risks of AI Actors in Advertising

Stepping into AI-powered advertising is exciting, no doubt. But it can feel like walking into a maze of legal tripwires you didn't know existed. You may be the director, but copyright, publicity rights, and consumer protection law quietly set the rules, and if you ignore them, your whole campaign could come crashing down.

This new terrain brings risks that old-school advertising simply never faced. Your brand could get sued for copyright infringement just because the AI model was trained on protected images without asking. It's also shockingly easy for an AI-generated face to look a bit too much like a real person, sparking a lawsuit for stealing their likeness.

Key Legal Challenges to Anticipate

The legal issues with AI actors aren't just hypotheticals anymore; they’re leading to real-world campaign takedowns, massive fines, and serious damage to a brand's reputation. Knowing exactly what you're up against is the first step toward creating an ad strategy that’s both innovative and legally sound.

Here’s a breakdown of what you need to watch out for:

- Copyright Infringement: Did the AI train on copyrighted photos or art? If so, your final ad could be legally considered a "derivative work," putting you on the hook for infringement.

- Right of Publicity Violations: If your AI actor resembles a real person closely enough to be identifiable (by their face, voice, or distinctive persona), you could face a lawsuit for using their likeness for commercial gain without permission.

- Deceptive Advertising Practices: The Federal Trade Commission (FTC) is crystal clear: ads must be truthful. Using AI actors for fake video testimonials or to imply an endorsement that never happened is a fast track to trouble.

- Navigating Deepfake Laws: More and more states are passing laws to clamp down on synthetic media. This creates a messy patchwork of regulations that changes depending on where your audience is.

To help you get a clearer picture, here’s a quick summary of the main legal hurdles you'll encounter.

Key Legal Risks with AI Actors at a Glance

| Legal Risk Area | What It Means for Your Ads | Potential Consequence |
|---|---|---|
| Copyright Infringement | The AI model used copyrighted images for training, making your ad a potential "derivative work." | Lawsuits, takedown notices, and financial damages. |
| Right of Publicity | Your AI actor accidentally resembles a real person's face, voice, or distinct persona. | Legal action from the individual for unauthorized commercial use of their likeness. |
| FTC & Deceptive Ads | Using AI to create fake testimonials, misleading product demos, or false endorsements. | Hefty FTC fines, legal sanctions, and severe brand reputation damage. |
| Deepfake & Privacy Laws | Violating new state-specific laws that regulate the creation and use of synthetic media. | Civil or criminal penalties, depending on the state and the nature of the violation. |

By proactively addressing these risks, you do more than avoid penalties: you turn legal hurdles into a competitive advantage and build trust with an audience that is increasingly wary of synthetic content.

Ultimately, the goal is to tap into the power of AI without stumbling into avoidable legal traps. By staying informed and creating a clear compliance plan, you can innovate with confidence.

The Copyright Minefield Hiding in AI Training Data

When it comes to AI in advertising, the single biggest legal headache is copyright infringement. The whole problem starts with how these models actually learn. Generative AI doesn't just invent things out of thin air; it’s trained on absolutely massive datasets, often containing millions of images, videos, and texts scraped right off the internet. That's where the trouble begins.

Imagine an AI model as a musician who listens to every song ever made to figure out how to compose. If that musician then spits out a new tune that sounds suspiciously like a famous, copyrighted melody, they've crossed a line. The same exact principle applies here. Your ad could be built on a foundation of unlicensed material without you ever knowing.

This hidden liability means that even with the best intentions, your brand could be on the hook for copyright infringement. The AI-generated actor or scene in your ad might legally qualify as a "derivative work," a new creation that's a little too close to an existing copyrighted piece, and you may have no way of knowing which training material it drew on.

The Murky Waters of "Substantial Similarity"

In court, the test for copyright infringement often boils down to a concept called "substantial similarity." This isn't about proving an AI made a pixel-for-pixel copy. It's much fuzzier. The real question is whether a regular person would look at the AI’s output and recognize that it was copied from a protected work.

For an advertiser, that ambiguity is a huge risk. Copyright doesn't protect an artistic style by itself, but it does protect distinctive characters, so if an AI generates a character for your campaign that closely resembles a famous cartoon character, you could be in for a lawsuit. The original creator doesn't have to prove the AI replicated their work exactly; under a test some courts apply, it can be enough to show that the "total concept and feel" of the two works is substantially similar.

And the legal battles are already ramping up. By late 2025, around 47 copyright lawsuits had been filed against AI companies in the US alone, many of them class actions. In high-profile examples, companies like Disney and Universal Studios have alleged that AI tools were trained on their blockbuster films without permission, creating a serious chain of risk for anyone using the visuals those tools generate.

Why You Can't Count on the "Fair Use" Defense

AI companies often defend their training process as "fair use," a legal doctrine allowing limited use of copyrighted material for purposes like research, commentary, or criticism. But even if that defense holds up for training, it doesn't automatically extend to what you do with the output, and the argument gets much weaker once that output is used for a commercial purpose like an ad.

Courts look at four key factors to decide if something is fair use:

- Purpose of the Use: Is it commercial or educational, and does it transform the original into something new? Advertising is purely commercial, which is a big strike against a fair use claim.

- Nature of the Original Work: Using creative works like photos and illustrations is much harder to defend than using factual data.

- Amount of the Work Used: Did the AI model copy the "heart" of the original piece, even if it didn't use the whole thing?

- Effect on the Market: Does the AI-generated image hurt the original creator's ability to sell or license their own work? If your AI image replaces the need to buy a stock photo, that's clear market harm.

For marketers, leaning on a fair use defense is a gamble you're likely to lose. Commercial use doesn't automatically defeat fair use, but in an ad campaign it weighs heavily against you, especially when the output substitutes for work you could have licensed. To get a better handle on protecting your own creative assets, it's also worth looking into broader intellectual property considerations.

The core issue isn't just what the AI creates, but what it learned from. If the training data is legally questionable, every piece of content it generates carries that inherited risk directly into your advertising campaigns.

Ultimately, the burden falls on you—the advertiser—to ask hard questions about where your AI assets are coming from. Choosing AI platforms that use licensed or ethically sourced training data isn't just about doing the right thing; it's a critical legal shield for your brand.

Protecting Human Identity in the AI Era

Moving beyond copyright, the legal issues with AI actors in advertising get personal, fast. This is where we run into the right of publicity: a person's right, recognized under the laws of many U.S. states, to control how their name, image, and likeness are used for commercial gain.

Think of it as a personal trademark on your identity. If you use AI to spin up a "digital twin" of a celebrity—or even just an everyday person—for an ad without getting their explicit permission, you're crossing a serious legal line. It’s essentially identity theft for profit, and the courts are not taking it lightly.

This isn't just about creating a perfect, photo-realistic copy, either. The law often protects any characteristic that points directly to a specific person. That could be their voice, a famous pose, or even a catchphrase they’re known for. Creating a virtual influencer that feels a little too familiar to a real-world celebrity is a lawsuit waiting to happen.

The Growing Threat of Unauthorized Digital Replicas

The explosion of deepfake technology has forced this issue into the spotlight, and lawmakers are scrambling to catch up. States are now passing laws specifically designed to fight the unauthorized creation and use of digital replicas. For marketers and creators, this means the old ways of getting consent may no longer hold up.

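You can no longer assume that a standard model release form covers the creation of an AI version of the person who signed it. The legal ground has shifted, and the emerging standard is explicit, informed consent that specifically mentions AI generation. California's AB 2602, for example, can make a contract clause permitting a digital replica unenforceable if it lacks a reasonably specific description of the intended uses and the performer wasn't represented by a lawyer or union. Anything less than clear, specific consent leaves your brand exposed.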

A recent court ruling on AI voice cloning drove this point home. It showed that even when federal copyright or trademark law doesn't quite fit a voice-cloning claim, state-level civil rights and publicity laws can still protect individuals whose identities are hijacked by AI. That puts the burden squarely on advertisers to know and respect these state laws.

Crafting an AI-Proof Consent Form

When you're getting permission to build an AI actor based on a real person, your approach needs to be completely different. The consent and release forms have to be airtight and leave absolutely no room for interpretation. A truly solid AI release should give you specific, unambiguous rights.

To stay on the right side of the law, your consent process needs to cover these key points:

- Explicit Right to Create a Digital Replica: The form must state, in no uncertain terms, that you intend to create a digital version of the person using AI.

- Scope of Use Defined: Be precise. Spell out exactly how and where the AI replica will be used—which campaigns, on what platforms, and for how long.

- Approval Rights on AI Output: This is a huge point of negotiation. Does the person get to review and sign off on the final AI-generated scenes before they're published?

- Future Use and Modifications: The contract has to clarify whether you have the right to alter the AI replica or use it in future campaigns that haven't been planned yet. Vague language here is a massive liability.

To see how the requirements have changed, just look at how traditional consent compares to what's needed for AI.

Real Person vs AI Actor Consent Checklist

This table highlights the critical differences between a standard release form and one designed for creating an AI replica.

| Consent Factor | Standard Release (Real Person) | AI Replica Release |
|---|---|---|
| Use of Likeness | Standard model release for photos/videos. | Explicit permission to generate a digital clone. |
| Duration of Use | Clearly defined time (e.g., one year). | Must state whether rights are perpetual or time-limited. |
| Modification Rights | Limited to standard editing (color correction, etc.). | Must grant rights to alter the AI's performance and dialogue. |
| Scope of Media | Specifies platforms (e.g., social media, TV). | Must spell out the platforms and campaigns covered, including whether future media are included. |

Ultimately, navigating the right of publicity in the age of AI comes down to transparency and respect. Getting crystal-clear consent isn't just about checking a legal box—it's about protecting your brand and honoring the rights of the people whose identities are fueling your creative work.

Staying on the Right Side of the FTC

Beyond the maze of copyright and publicity rights, another major player enters the ring when you use AI actors: the Federal Trade Commission (FTC). The FTC’s job is simple: protect consumers from ads that lie or mislead. They don't care if your ad was created by a team of artists or a sophisticated algorithm—if it's deceptive, it's a problem.

At its core, the FTC's rule is straightforward. An ad can't be deceptive. This standard applies to everything the ad says and implies, including claims about the AI technology used to make it. If your AI actor claims a new protein powder helps build muscle 2x faster, you better have solid scientific evidence to back that up, just as you would if a human celebrity said it.

The agency is also coming down hard on what they call "AI-washing." This is when a company blows its AI capabilities way out of proportion. Touting your campaign as a groundbreaking AI achievement when it just performs a few basic automated tasks is a surefire way to get on the FTC's radar.

The Two Truths You Must Uphold

When AI actors get involved, you’re suddenly juggling two sets of responsibilities. You have to be honest about what you're selling and honest about the technology you're using to sell it.

Think of it as a two-part integrity check:

- Truth in Your Ad's Message: Are the claims, demonstrations, or testimonials presented by your AI actor actually true? An AI-generated video of a happy "customer" raving about your product is flat-out deceptive if that customer doesn't exist.

- Truth in Your Tech Claims: Are you exaggerating what your AI can do? If you claim your AI delivers perfectly personalized ads but it really just changes the background color based on location, you're misleading your audience.

The FTC judges an ad by its net impression: the message an average consumer walks away with, not the technical process behind it.

Regulators Aren't Messing Around

This isn't just a slap on the wrist. The FTC is actively pursuing companies for deceptive AI marketing, sending a clear message that using "AI" as a buzzword isn't a free pass to make baseless claims.

The FTC has made it clear: if you make a claim about your AI, you need to be able to prove it. Advertisers must have robust evidence for every performance claim, just as they would for any other product feature.

And this isn't some far-off future scenario; it's happening right now. By mid-2025, the FTC had already launched at least a dozen enforcement actions against "AI-washing." Later that year, in August 2025, it sued Air AI over claims that its AI could completely replace human sales reps. Cases like this underscore the serious legal and financial risks for marketers who overhype their AI tools without proof. You can learn more about the FTC's evolving stance on AI law.

When Do You Need to Disclose?

This brings us to a critical question: when do you have to tell people they're looking at an AI-generated actor? There isn't a single federal law with a hard-and-fast rule yet, but the FTC's general principles on deception give us a pretty clear guide.

The litmus test is this: "Would knowing this is AI change how a consumer understands the ad?"

If you use an AI actor to pose as a real doctor recommending a supplement or an actual customer sharing a personal story, failing to disclose that it's AI is almost certainly deceptive. The omission is misleading because it gives the endorsement a sense of credibility it doesn't actually have.

To stay safe and build trust, clear and prominent disclosures are becoming the new standard. A simple label like #AIGenerated, placed where viewers will actually notice it rather than buried in a string of hashtags, goes a long way toward keeping you ahead of regulations and on the right side of your customers.

Your Practical Compliance Checklist for AI Ads

Alright, let's move past the theory and get down to what you actually need to do. Tackling the legal minefield of AI in advertising is all about having a solid, repeatable process. This isn't about stifling creativity with red tape; it's about building guardrails so your team can innovate with confidence.

Think of this checklist as your pre-flight routine before launching any AI-powered campaign. By building these steps right into your workflow, you create a foundation of compliance that protects your brand, respects individual rights, and keeps you on the right side of regulators. Let's walk through it.

Step 1: Audit Your AI Tool and Its Training Data

Before you even think about generating an image, you have to pop the hood on your AI platform. The legal standing of your final ad is directly tied to the data that AI model was trained on. Just assuming a paid tool is "safe" is a massive, and potentially very expensive, gamble.

You need to ask your AI vendor some direct questions and really dig into their terms of service. Any reputable provider should be upfront about where their data comes from.

- Demand Data Transparency: Ask them flat out: Was your model trained on licensed, public domain, or ethically sourced data? If you get a vague, hand-wavy answer, that's a huge red flag.

- Check for an Indemnity Clause: Will the provider cover your legal bills if their tool spits out something that gets you sued? This is called indemnification. You'll find that many platforms put 100% of the legal risk squarely on you, the user.

- Look for "Commercially Safe" Tools: Some platforms specifically market their models as safe for commercial use. This is often a good sign that they've done their homework on data rights, but confirm the claim in the contract terms rather than relying on the marketing copy.
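If you track vendor reviews in a script or a spreadsheet export, the three questions above map naturally onto a simple record. The Python sketch below is purely illustrative: `VendorAudit`, its fields, the vendor name "ExampleGen", and the red-flag rules in `red_flags` are hypothetical choices for this example, not an industry standard or any vendor's API.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical record of a vendor's answers to the Step 1 questions.
# Field names and allowed values are illustrative assumptions.
@dataclass
class VendorAudit:
    vendor: str
    training_data_source: str       # e.g. "licensed", "public_domain", or "unknown"
    offers_indemnity: bool          # will the vendor cover your legal costs?
    commercial_use_permitted: bool  # do the terms clearly allow use in paid ads?

# Sources this sketch treats as lower risk.
SAFER_SOURCES = {"licensed", "public_domain"}

def red_flags(audit: VendorAudit) -> List[str]:
    """Return the Step 1 checks this vendor fails (an empty list means none)."""
    flags = []
    if audit.training_data_source not in SAFER_SOURCES:
        flags.append("training data not documented as licensed or public domain")
    if not audit.offers_indemnity:
        flags.append("no indemnification: legal risk stays with you")
    if not audit.commercial_use_permitted:
        flags.append("terms do not clearly permit commercial use")
    return flags

# Example: a hypothetical vendor that gives a vague answer and offers no indemnity.
audit = VendorAudit("ExampleGen", "unknown", offers_indemnity=False,
                    commercial_use_permitted=True)
for flag in red_flags(audit):
    print("RED FLAG:", flag)
```

A vague answer is recorded as "unknown" so it surfaces as a red flag instead of being quietly treated as safe.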

Step 2: Secure Watertight Rights and Consent

This one is non-negotiable. If your ad involves a digital double of a real person, or even an avatar that looks a lot like someone, you need explicit consent. Your old-school model release form won't cut it here; your agreements now need to be specific and anticipate future uses.

The name of the game is informed consent. You have to be crystal clear that you intend to create and use an AI-generated version of a person, spelling out exactly how, where, and for how long. Any ambiguity in that contract is basically an open invitation for a lawsuit down the road.

This is more than just getting a signature; it’s about clear communication. A rock-solid AI release should cover all the bases to prevent future headaches.

- Specify AI Generation: The contract has to explicitly state that you have the right to create a "digital replica" or "AI-generated likeness."

- Define the Scope of Use: Get granular. Outline which platforms the AI actor will appear on, the types of campaigns, and the exact duration of use.

- Address Modification Rights: Does the contract let you tweak the AI's performance? Can you change its dialogue or drop it into entirely new scenes? Spell it out.
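Teams that manage many releases sometimes keep a structured summary of what each one actually grants. The following is a minimal sketch that assumes you record the terms yourself after legal review. The hypothetical `AIRelease` record mirrors the Step 2 points, `consent_gaps` lists what a release leaves unaddressed, and `covers_use` checks whether a planned placement falls inside the written scope and term. All names, fields, and example values (including "Jane Doe") are invented for illustration, and the code checks your summary, not the contract itself.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional

# Hypothetical summary of what a signed AI release grants, mirroring Step 2.
# These fields are illustrative, not legal terms of art.
@dataclass
class AIRelease:
    person: str
    grants_digital_replica: bool                  # explicitly names AI replicas?
    platforms: List[str] = field(default_factory=list)
    campaigns: List[str] = field(default_factory=list)
    expires: Optional[date] = None                # None: no end date written down
    perpetual: bool = False                       # True only if expressly perpetual
    allows_modification: Optional[bool] = None    # None: contract is silent

def consent_gaps(release: AIRelease) -> List[str]:
    """List the Step 2 points this release leaves unaddressed."""
    gaps = []
    if not release.grants_digital_replica:
        gaps.append("no explicit right to create a digital replica")
    if not release.platforms or not release.campaigns:
        gaps.append("scope of use (platforms and campaigns) not defined")
    if release.expires is None and not release.perpetual:
        gaps.append("duration of use not stated")
    if release.allows_modification is None:
        gaps.append("modification rights not addressed")
    return gaps

def covers_use(release: AIRelease, platform: str, campaign: str, on: date) -> bool:
    """True only if a planned use falls inside the release's written scope and term."""
    in_term = release.perpetual or (release.expires is not None and on <= release.expires)
    return (release.grants_digital_replica
            and platform in release.platforms
            and campaign in release.campaigns
            and in_term)

release = AIRelease("Jane Doe", grants_digital_replica=True,
                    platforms=["instagram", "youtube"], campaigns=["spring-launch"],
                    expires=date(2026, 6, 30))
print(consent_gaps(release))  # ['modification rights not addressed']
print(covers_use(release, "tiktok", "spring-launch", date(2026, 1, 15)))  # False
```

Treating "the contract is silent" as a separate state from "yes" or "no" is the point of the design: silence on modification or duration is exactly the ambiguity the checklist warns about.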

Step 3: Scrutinize Every AI-Generated Output

Once the AI creates something for you, the real work begins. Every single image, video, and voiceover needs a human review. This is your last line of defense against infringement, and you can't skip it.

Never assume that just because an image is "new," it's legally clean. These models can—and do—accidentally mimic protected styles, characters, or even brand logos.

- Check for 'Substantial Similarity': Does the output look or feel a little too close to a famous movie character, a piece of art, or a particular artist's signature style? If your gut says it's too close, it probably is. Ditch it and generate a new one.

- Scan for Trademarks: Look closely at the backgrounds. Did the AI sneak in a logo, a brand name, or a distinctive product shape?

- Listen for Vocal Likeness: If you're generating audio, does the voice sound eerily similar to a famous actor or public figure? That's a potential right of publicity violation waiting to happen.

Step 4: Implement Clear Disclosures and Substantiate Claims

Finally, you have to be honest with your audience and play by FTC rules. This means being transparent about using AI when it matters and, most importantly, backing up every claim your ad makes with cold, hard proof.

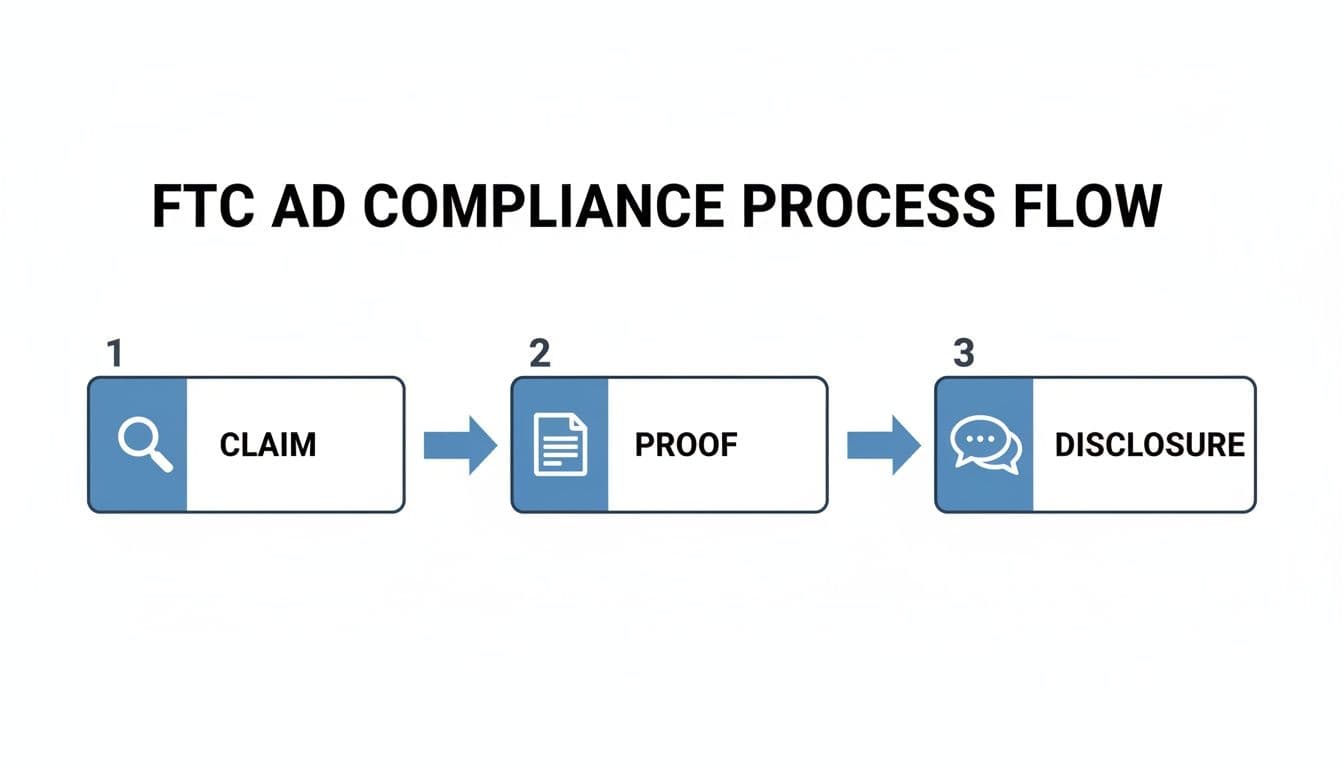

This simple flowchart breaks down the FTC's core expectation for any ad, AI-driven or not.

It's a straightforward cycle: for every claim you make, you need proof to back it up, plus a clear disclosure wherever leaving something out would mislead. This loop keeps your advertising truthful and defensible.

- Disclose When It Matters: If a typical person might be tricked into thinking your AI actor is a real person—like a doctor giving medical advice or a customer raving about a product—you need to disclose it. A simple #AIGenerated or #AIAd can do the trick.

- Substantiate All Claims: Every single factual statement your AI actor makes needs evidence. If your AI spokesperson says a product is "50% more effective," you’d better have the clinical study to prove it before that ad sees the light of day.

- Build a 'Substantiation File': For every campaign, get in the habit of creating a file that contains all the data, studies, and evidence that support your ad's claims. If the FTC ever comes knocking, that file will be your best friend.
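A substantiation file is easier to keep complete when every claim is tied to its evidence before launch. The Python sketch below is hypothetical: `Claim`, `AdCreative`, `prelaunch_issues`, and the example file name are invented for illustration. Following the rules in the list above, it flags claims with nothing on file and AI actors presented as real people without a label. It can't judge whether the evidence is strong enough or whether a label is prominent enough; those calls still need human review.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical substantiation-file entries; field names are illustrative.
@dataclass
class Claim:
    text: str
    evidence: List[str] = field(default_factory=list)  # e.g. study IDs or file paths

@dataclass
class AdCreative:
    name: str
    claims: List[Claim]
    ai_actor_poses_as_real_person: bool  # e.g. an AI "doctor" or "customer"
    disclosure_label: str = ""           # e.g. "#AIGenerated"; empty means none

def prelaunch_issues(ad: AdCreative) -> List[str]:
    """Flag claims with no evidence on file and undisclosed AI 'real people'."""
    issues = [f"unsubstantiated claim: {c.text!r}" for c in ad.claims if not c.evidence]
    if ad.ai_actor_poses_as_real_person and not ad.disclosure_label.strip():
        issues.append("AI actor presented as a real person without a disclosure")
    return issues

ad = AdCreative(
    name="protein-spring",
    claims=[
        Claim("50% more effective"),                                 # no study on file
        Claim("25 g protein per serving", ["lab-report-017.pdf"]),  # hypothetical file
    ],
    ai_actor_poses_as_real_person=True,
)
for issue in prelaunch_issues(ad):
    print(issue)
```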

Common Questions About AI Actors in Advertising

Jumping into AI-powered advertising often raises more questions than answers. The legal side of things is still a bit like the Wild West, which can make it tough to feel confident in your decisions. Let's tackle some of the most common questions marketers are asking about the legal issues of AI actors in ads. My goal here is to give you straightforward answers so you can build smarter, safer campaigns.

Do I Need to Disclose That My Ad Uses an AI Actor?

More and more, the answer is yes. While there isn't one big federal law mandating this for every ad, the ground is definitely shifting. The best way to think about it is through the lens of the FTC's rules against deceptive practices. If not telling someone an actor is AI would mislead them, you absolutely have to disclose it. Think about using an AI-generated "doctor" to plug a health product—the fact that the doctor isn't real is a pretty big deal.

Besides, the platforms are already making the choice for you. Big players like Meta and YouTube now require labels for realistic AI-generated content, so disclosure is becoming a basic cost of doing business.

Here’s the simple rule I follow: If there's any chance a regular person could be fooled, add a clear and simple disclosure like #AIGenerated. This isn't just about following rules that might be coming down the pike; it’s about building trust with your audience, which is priceless.

Being upfront manages expectations and protects your brand, especially as people get savvier (and more skeptical) about synthetic media.

Can I Get Sued if My AI Tool Creates an Infringing Image?

You bet. A huge misconception is that if the AI tool makes the mess, the tool's provider is the one who has to clean it up. That's not how copyright law works. The company that publishes the infringing ad (meaning your company) can be held directly liable, because publishing the ad reproduces and displays the protected work. The AI provider may also face secondary liability, such as contributory or vicarious infringement, but the two kinds of liability can exist side by side.

Even if the AI platform also gets in trouble, that doesn't let you off the hook. This is why you have to read the terms of service for any AI tool you use. Some platforms might offer indemnification (meaning they’ll cover your legal bills), but many are written to put all the legal responsibility squarely on your shoulders as the user.

Your best defense is to be proactive. Scrutinize every single AI-generated image for any uncanny resemblances to existing copyrighted work. And try to stick with AI platforms that are transparent about where their training data comes from—those that use legally licensed or public domain data are always a safer bet.

What Is the Difference Between a Stock Photo and an AI Person?

The key difference boils down to where the legal rights come from. When you license a stock photo of a person from a reputable library, it typically comes with a model release. This is a legal document in which the real person in the photo has consented to the commercial use of their likeness. It's your legal security blanket.

An AI-generated person doesn't have a model release because, well, they don't exist. But this opens up a whole new can of worms you don't have to worry about with stock photos.

- Accidental Doppelgänger: The AI image could randomly look just like a real person, creating a surprise right of publicity claim you never saw coming.

- Copyright Landmines: The image itself could be challenged based on what the AI was trained on. If the model learned from a database of protected images, your "original" AI person could legally be considered an infringing copy.

Essentially, with an AI person, you're swapping a known, clear legal process (model releases) for a new, murky set of risks tied to copyright and identity.

How Can I Safely Create an AI Brand Avatar?

If you want to create an AI brand avatar or influencer without getting a scary letter from a lawyer down the road, you need a plan. You can't just wing it.

Start by making sure the avatar is truly one-of-a-kind. Get creative with detailed prompts, then manually tweak the results to steer the final look far away from any real people or existing characters. Keep a record of this creative process; it could be crucial evidence that you independently developed the design rather than copying someone else's.

Next, give your avatar a unique name and backstory that has no connection to a real person. Do a trademark search for the avatar's name and even its visual design to make sure you're not stepping on another brand's toes.

Finally, comb through your AI tool’s terms of service. You need to be sure they grant you full commercial ownership or, at the very least, a broad commercial license for anything you create. Being transparent with your audience that the avatar is AI will also go a long way in heading off both legal and PR problems.

Ready to create high-performing ads without the legal guesswork and high production costs? ShortGenius is an AI ad platform designed for creators and marketers who need to produce stunning short-form video and image ads for all major social platforms. Generate concepts, scripts, and visuals in seconds, then customize them with your brand kit and deploy campaigns that get results. Try ShortGenius today and start building better ads, faster.