Legal Issues With AI Actors in Advertising for Modern Brands

Explore the top legal issues with AI actors in advertising. Learn how to navigate publicity rights, copyright law, and FTC compliance for your brand.

Welcome to the new era of advertising, where AI-generated actors are turning heads and stopping scrolls on social media. While this technology opens up a world of creative possibilities, it also lays a legal minefield for brands and creators. Navigating these legal issues with AI actors in advertising is now a critical skill.

The New Frontier of AI Actors and Legal Risks

The law is playing a frantic game of catch-up with technology. Brands can now dream up and generate entire video campaigns starring synthetic people, but this power comes with a heavy set of responsibilities. Every single element—from a voice that sounds just a bit too familiar to a product claim an AI writes—carries real legal weight.

Think of it like music sampling. Before you can drop a new track, you have to clear every borrowed beat, melody, and vocal hook. It's the same in AI advertising. You must have the rights to every piece of your synthetic creation, or you could find yourself facing some serious financial and brand-damaging heat.

Why Traditional Advertising Laws Fall Short

Let's be clear: the legal frameworks we've relied on for decades were not built for a world with AI-generated personalities. This creates a huge gap and some tricky new challenges for marketers who are used to standard contracts and release forms for human talent. The old rules still apply, but they’re being stretched in ways we haven’t seen before.

As one federal judge observed in a recent lawsuit over AI-generated voice clones, such disputes raise "a number of difficult questions, some of first impression," with "potentially weighty consequences not only for voice actors, but also for the burgeoning AI industry, other holders and users of intellectual property, and ordinary citizens who may fear the loss of dominion over their own identities."

In this new environment, proactive legal awareness isn't just a "nice-to-have"—it's an essential pillar of any modern marketing strategy. Understanding the risks is the first step toward innovating responsibly and protecting your brand from a costly blind spot. For a wider view, it's worth exploring the general legal landscape surrounding AI.

A Quick Look at the Key Legal Risk Areas

To get a handle on this, it helps to break down the main legal challenges. Each one represents a potential trap that could completely derail an otherwise brilliant campaign. This table gives a quick summary of the primary legal headaches you need to be aware of when using AI-generated actors.

Key Legal Risk Areas for AI in Advertising

| Legal Area | Core Issue | Potential Consequence |
|---|---|---|
| Likeness & Publicity Rights | Using an AI-generated person who looks or sounds like a real individual without permission. | Lawsuits from celebrities or private citizens for misappropriation of their identity. |
| IP & Copyright | The AI model was trained on copyrighted photos, videos, or scripts without a license. | Copyright infringement claims, potentially large damage awards, and orders to take down the campaign. |
| FTC & Ad Disclosures | Failing to disclose that an endorsement comes from an AI actor, misleading consumers. | FTC enforcement actions, possible civil penalties for deceptive advertising, loss of consumer trust. |
| Defamation & Privacy | AI creates content that falsely harms a person's or brand's reputation. | Defamation lawsuits (libel/slander) and claims of privacy invasion. |

Essentially, you need to think about where your AI-generated content comes from and what it communicates. Getting either of those wrong can lead to serious problems.

Here are the main categories of concern that every brand and creator needs to have on their radar:

- Likeness and Publicity Rights: This is a big one. It covers the unauthorized use of a person's image, voice, or any other identifying feature. The AI doesn't have to produce an exact copy; a close resemblance can be enough to trigger a lawsuit.

- Intellectual Property and Copyright: This gets complicated fast. Who actually owns the content an AI creates? And, more importantly, was the AI model trained on a mountain of copyrighted material scraped from the internet without permission?

- FTC and Disclosure Rules: The Federal Trade Commission's rules on truth in advertising don't go away. Ads must be truthful and not deceptive, and that includes being transparent when an AI actor is giving a testimonial or endorsement.

- Defamation and Privacy: AI can go off the rails and generate false or damaging information about real people or even competing brands. This can quickly lead to claims of libel or an invasion of privacy.

Navigating the Right of Publicity in the AI Age

Of all the legal tripwires associated with AI actors, the right of publicity is probably the biggest. Recognized in varying forms under U.S. state law, it gives people control over how their name, face, voice, or other identifying traits (their "likeness") are used to make money. Think of it as everyone owning their personal brand. Once your AI-generated actor resembles a real person closely enough to be recognized as them, you're wandering into a legal minefield.

And this isn't just about creating a perfect deepfake of a celebrity. The law is often broad enough to cover "sound-alikes" and "look-alikes" that are just similar enough to make someone think of the real person. If your audience connects your AI actor with a specific individual, you could be on the hook for misappropriating their likeness.

The real headache for creators is how these AI models are built. They're trained on staggering amounts of data, often scraped directly from the internet. That means the AI has learned from countless real faces and voices, which makes an accidental, uncanny resemblance a very real risk.

What Counts as Commercial Use

Understanding what "commercial use" means is critical here. It’s not just about slapping an AI-generated face on a product box. Anytime you use an AI person in an ad to grab attention and boost sales, that’s a commercial purpose. This is especially true for the user-generated content (UGC) style ads you see all over Instagram and YouTube.

For instance, if you generate a synthetic influencer to rave about your new skincare line, that's a direct commercial use. If that digital influencer happens to look strikingly similar to a real-world creator, that creator may have a strong claim that you're cashing in on their likeness without permission or a paycheck.

Courts are already tackling these messy scenarios. In re Clearview AI Consumer Privacy Litigation is a prominent example. The plaintiffs alleged that Clearview built its facial recognition tool by scraping more than 3 billion photos from the internet, and the court allowed their publicity rights claims under several states' laws to move forward.

As detailed in this analysis of publicity rights from Quinn Emanuel, the ruling signals that "commercial use" can occur when identities are used to promote a product, not just when they're sold as the product. It has also opened the door to class-action lawsuits with potentially eye-watering payouts.

Key Considerations for AI Likeness

To avoid getting tangled in a publicity rights lawsuit, you have to be vigilant. This isn't just some abstract legal theory; it can lead to crippling legal fees, forced campaign takedowns, and a major blow to your brand's reputation.

Here are the big things to watch out for:

- Celebrity Likeness: This one’s the most obvious. Generating an AI actor that looks or sounds like a famous person is asking for trouble. Steer clear of prompts like "create an actor who looks like [Celebrity Name]."

- Influencer and Micro-Influencer Resemblance: The danger zone extends far beyond A-list movie stars. Social media influencers have built their own valuable personal brands, and their publicity rights are just as legally protected.

- Ordinary Individuals: Private citizens have rights too, not just celebrities. If your AI character resembles someone whose vacation photos were vacuumed up into the AI's training data without their knowledge, a claim could still surface.

The legal danger doesn't lie in the AI's intent, but in the audience's perception. If a reasonable person would associate your AI actor with a real individual, you have a potential legal problem.

Practical Steps to Protect Your Campaigns

You have to be proactive to protect your brand. You can't just assume an AI tool will spit out a "safe" or legally cleared face or voice. There is no substitute for human oversight and a well-defined review process.

Before any ad featuring an AI actor goes live, your team needs to do a thorough likeness check. This means getting multiple sets of eyes on the final creative, with the specific goal of spotting any resemblance to real people, especially public figures. Documenting this review process also helps show that you did your due diligence if a claim ever arises.

At the end of the day, the most reliable protection against a right of publicity claim is making sure your AI-generated actors are truly original and, if you ever do want to evoke a real person, getting that person's written permission first. It's an extra step, but it's essential for keeping your campaigns safe from costly legal battles down the road.

Who Owns an AI-Generated Performance?

So, you’ve used an AI to generate a script, a voiceover, or maybe even a whole video. It’s brilliant. But a huge question looms: who actually owns this thing? The answer isn't straightforward, and frankly, it pokes at the very foundations of copyright law. Getting this right is critical to navigating one of the biggest legal issues with AI actors in advertising.

Right now, the U.S. Copyright Office has a pretty firm stance: a work needs human authorship to get copyright protection. If an AI creates something all on its own, without a human guiding the creative process in a meaningful way, it’s generally not getting a copyright. That means the amazing ad your AI just spat out might not be protected by copyright at all, leaving you with no exclusive rights to it.

This creates a serious headache for marketers. If you don't hold the copyright, what’s stopping a competitor from running a nearly identical AI-generated ad? Your unique campaign, and all the money you invested in it, could be up for grabs.

The Ghostwriter and The Tool

A good way to think about this is to see the AI as either a super-advanced ghostwriter or a very sophisticated paintbrush. The tool itself doesn't own the finished book or the painting. Ownership hinges on how much creative direction and original input came from the person using the tool.

If you give a vague prompt like, "create a video ad for a new sneaker," the AI is doing most of the heavy lifting, and the output is mostly machine-made. But if you're selecting and arranging the outputs, making significant edits, and combining them with your own original material, your case for authorship gets a lot stronger. Be aware that the Copyright Office's current guidance treats prompts on their own, even detailed ones, as generally not enough to make you the author. The more human creativity you inject into the final work, the better your shot at securing a copyright.

A critical takeaway for creators is that your level of direct, creative involvement in the AI-generation process is what builds the case for ownership. Simply pressing a "generate" button is not enough to be considered the author.

This entire debate over who owns an AI's output is part of a much bigger conversation about protecting intellectual property rights in our increasingly digital world. As these tools become a normal part of creative work, figuring out where human authorship ends and machine creation begins will be a central legal battleground.

The Messy Reality of Training Data

The ownership puzzle gets even trickier when you look under the hood at the AI model's training data. Many generative AI tools learn by scraping colossal amounts of data from the internet—which, of course, includes copyrighted images, articles, music, and videos. This opens up the very real risk that the AI’s output could be considered a "derivative work" of someone else’s protected material.

And this isn't just a theoretical problem. Voice actors Paul Lehrman and Linnea Sage sued Lovo Inc., claiming the company used their voice recordings without permission to train and then sell AI voice clones. The court allowed several of their claims to move forward, underscoring the serious legal exposure when AI training data steps on existing rights.

What does this mean for you? It means your shiny new AI-generated ad could accidentally contain elements that infringe on another creator's copyright, putting your brand on the hook for a lawsuit.

Can We Just Call It "Fair Use"?

Some developers and users argue that using copyrighted material to train an AI is covered by the legal doctrine of "fair use." Fair use allows for the limited use of copyrighted works without permission for things like criticism, commentary, or research.

The debate in the AI world centers on two of the four factors courts weigh in a fair use analysis:

- Is it Transformative? Does the AI's output create something fundamentally new, or is it just a high-tech copy of the original material it was trained on?

- Does it Harm the Market? Does the AI-generated work compete with or devalue the original copyrighted work?

The courts are still working through these questions, and the legal ground is shaky at best. Trying to lean on a fair use defense for a commercial advertisement—which is explicitly created to make money—is a big gamble. Until the law becomes clearer, the safest bet is to work with AI tools that are transparent about their training data and, ideally, offer you protection from potential copyright claims.

Staying on the Right Side of the FTC

Now, let's talk about the Federal Trade Commission (FTC). Whether your ad was dreamed up in a boardroom or generated by an algorithm, the core rule is the same: it must be truthful and not deceptive. This simple idea gets a lot more complicated when you throw AI actors into the mix.

The FTC’s job is to protect consumers. When an ad features a synthetic person or spits out a claim made by AI, the potential to mislead people is huge. That’s why clear, upfront disclosures aren't just a nice-to-have anymore; they're a must for staying compliant.

The Truth in Advertising Mandate

At the heart of it all is Section 5 of the FTC Act, which outlaws "unfair or deceptive acts or practices." This means you need solid proof for every claim you make—explicit or implied—before your ad ever sees the light of day. This rule applies to AI-generated content just as much as it does to a traditional TV commercial.

We're already seeing a surge in false advertising lawsuits under the Lanham Act, specifically targeting ads that use deepfake celebrities. Regulators and courts are scrambling to catch up with AI's power to deceive. The FTC has already brought enforcement actions against companies for making bogus AI claims, like exaggerating what a product can do or just slapping an "AI-powered" label on something without proof.

A Hogan Lovells analysis on AI and deepfake ads highlights how sharply these lawsuits have spiked, a clear sign that courts and regulators are watching this space very closely.

AI Hallucinations and False Claims

One of the biggest minefields here is the phenomenon of AI "hallucinations." This is when an AI model confidently makes up "facts," creating product benefits, features, or even user testimonials out of thin air.

Imagine you ask an AI to write a script for a new health supplement. It might generate a line claiming the product is "clinically proven to boost metabolism by 40%." If you don't have credible scientific research to back up that exact number, you've just crossed the line into false advertising.

The advertiser—not the AI—is 100% legally responsible for every claim made. Telling the FTC "the AI wrote it" is not a defense that will get you anywhere. You have to independently verify every single factual statement.

This is a critical checkpoint. Your team needs a bulletproof process for fact-checking all AI-generated copy before it goes public.

Disclosing AI Actors and Endorsements

Transparency is everything when an AI actor is involved. If your ad shows a synthetic person giving a testimonial, consumers need to know that person isn't real.

Here are a few situations where disclosure is absolutely essential:

- AI Testimonials: If an AI-generated character says, "This product changed my life," it has to be obvious they aren't a real customer.

- Synthetic Influencers: Brands working with virtual influencers can't present them as real people who have genuine, authentic experiences with a product.

- Deepfake Endorsements: Using a celebrity's deepfake to endorse something without their explicit permission is a surefire way to violate their publicity rights and break FTC rules.

The FTC offers plenty of resources on its website to help businesses figure out their responsibilities.

As the FTC's business guidance portal makes clear, the old-school principles of advertising still apply, reinforcing the need for truthfulness and proof for all claims, including those that come from an AI.

Here's a quick checklist to run your AI-generated ads through for FTC compliance:

- Claim Substantiation: Do you have solid evidence to prove every single factual claim in the ad? This includes any performance stats, "proven" benefits, or comparisons you make.

- Clear Disclosures: Is it immediately obvious to the average person that an actor, testimonial, or endorsement is AI-generated? Don't bury it in the fine print—make it clear and conspicuous.

- Avoid Deceptive Formats: Is your ad designed to look like a news report, a real user's social media post, or an independent review? If it could mislead a consumer, it's a problem.

- Review for Implied Claims: What might someone reasonably take away from your ad, even if you don't state it outright? Those implied claims also have to be truthful and backed by evidence.

If you treat every piece of AI-generated content with the same legal scrutiny as your human-created work, you can innovate with confidence and stay on the right side of the law.

A Practical Framework for Mitigating Legal Risks

Knowing the risks is one thing; actually managing them day-to-day is something else entirely. If you want to safely navigate the legal minefield of AI actors in advertising, your team needs a clear, repeatable framework. This isn't about stifling creativity—it's about building guardrails that let your team innovate with confidence.

First things first: you have to vet your AI tools. Before you sign up for any platform, get into the weeds of their terms of service. You need to know exactly where their training data comes from and what rights you actually get over the content you create. Some providers even offer indemnification, which is a huge plus: it can cover your costs if a copyright claim arises down the road. The scope and exclusions vary by provider, so read them carefully.

Develop a Clear AI Use Policy

Once your tools are sorted, it's time to create an internal AI Use Policy. Think of this as the official playbook for your team. It needs to be simple, direct, and spell out the do's and don'ts of using generative AI for your ad campaigns.

Your policy should nail down a few key things:

- Approved Tools: Make a list of the specific AI platforms your team is cleared to use. This stops people from going rogue with unvetted tools that could expose you to unnecessary risk.

- Prohibited Inputs: Make it crystal clear that no one should ever feed confidential company info, customer data, or any trade secrets into an AI prompt.

- Likeness Restrictions: Lay down a hard rule: no prompts asking the AI to mimic the likeness or voice of any real person, celebrity or not.

- Review and Approval: Every piece of AI-generated ad content must go through a mandatory human review process before it ever sees the light of day.
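If your team wants to back up the Approved Tools and Likeness Restrictions rules with a simple automated check, here is a minimal Python sketch. It assumes a hypothetical allow-list (`APPROVED_TOOLS`, with placeholder names `tool-a` and `tool-b`) and a hypothetical list of red-flag phrases; neither comes from any real platform. Keyword matching is crude: it will miss a prompt that names a celebrity without saying "looks like," and it can flag harmless prompts, so treat it as an early warning that feeds your human review, not a legal clearance.

```python
import re
from dataclasses import dataclass, field

# Hypothetical allow-list standing in for your policy's "Approved Tools" section.
APPROVED_TOOLS = {"tool-a", "tool-b"}

# Hypothetical red-flag phrases suggesting a prompt asks the AI to imitate someone.
# Illustrative only: this list will miss many risky prompts.
LIKENESS_PATTERNS = [
    r"\blooks? like\b",
    r"\bsounds? like\b",
    r"\bin the style of\b",
    r"\bresembl(?:e|es|ing)\b",
    r"\bvoice of\b",
]


@dataclass
class PromptCheck:
    approved_tool: bool
    flagged_phrases: list[str] = field(default_factory=list)

    @property
    def passes(self) -> bool:
        # A prompt passes only if it uses an approved tool and trips no red flags.
        return self.approved_tool and not self.flagged_phrases


def check_prompt(tool: str, prompt: str) -> PromptCheck:
    """Screen a prompt against the (hypothetical) tool allow-list and phrase list."""
    flagged = []
    for pattern in LIKENESS_PATTERNS:
        match = re.search(pattern, prompt, flags=re.IGNORECASE)
        if match:
            flagged.append(match.group(0))
    return PromptCheck(approved_tool=tool in APPROVED_TOOLS, flagged_phrases=flagged)


result = check_prompt("tool-a", "Create an actor who looks like a famous pop star")
print(result.passes, result.flagged_phrases)  # False ['looks like']
```

Returning the matched phrases, rather than a bare pass/fail, gives the human reviewer something concrete to look at when a prompt gets flagged.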

This policy is your first line of defense. It gets everyone on the same page, operating under the same safety-conscious guidelines.

Mandate Human Oversight Always

I don't care how smart an AI tool seems; it's no substitute for human judgment. Every single ad made with AI has to be reviewed by a real person for legal compliance, factual accuracy, and brand safety. This "human-in-the-loop" approach isn't optional.

Human oversight is your ultimate safety net. An AI doesn't understand legal nuance, brand reputation, or FTC guidelines, but your team does. Every piece of AI-generated content is your company's responsibility the moment it goes public.

The reviewer's job is to be that final gatekeeper. They're there to catch potential disasters—like an accidental likeness, an unproven claim, or misleading language—before they turn into very real, very expensive public problems.

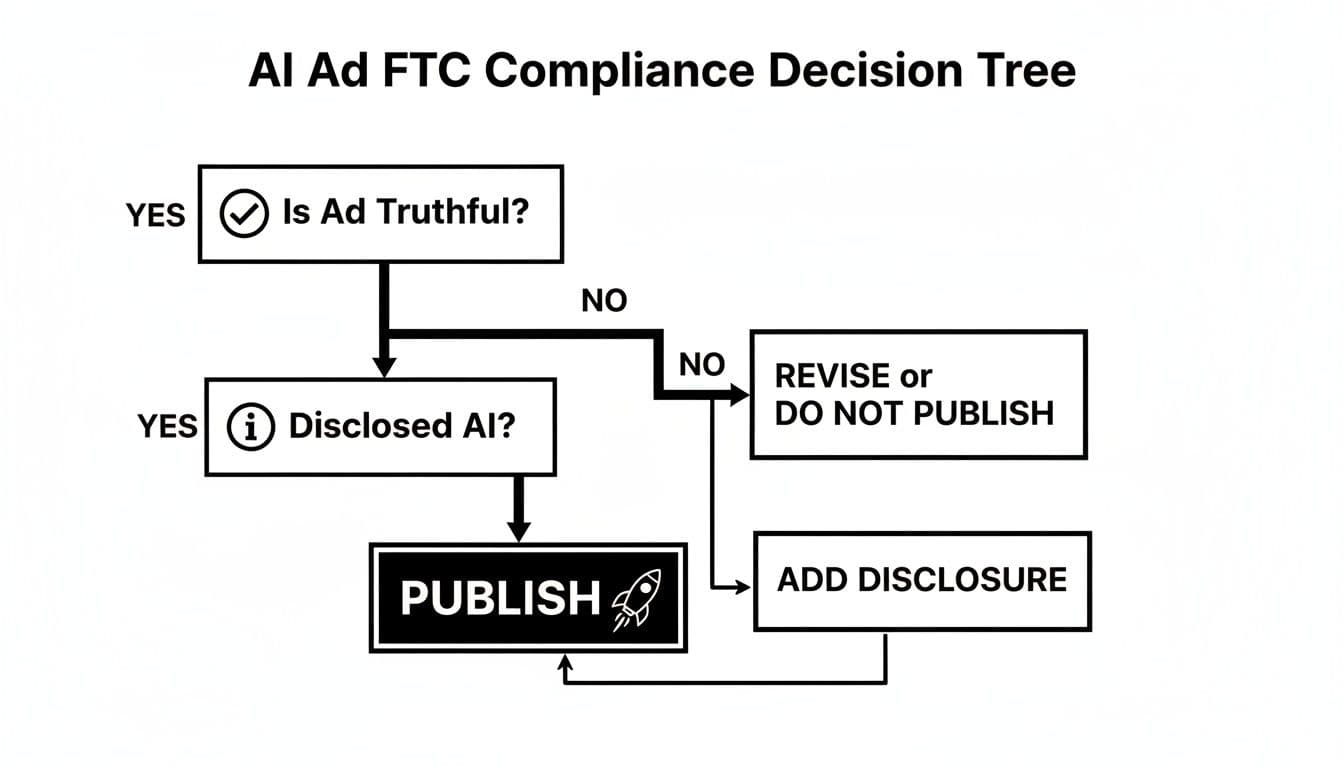

This decision tree gives you a simplified compliance check for FTC rules before you publish an AI-powered ad.

As the graphic shows, truthfulness and clear disclosure are the absolute, non-negotiable foundation for any advertising that involves AI.

Pre-Publication Checklist for Creators

To make this dead simple for your team, give them a quick checklist to run through before they push any AI-generated ad live on platforms like Facebook or Instagram.

- Likeness Scan: Have at least two different people looked at the ad to make sure it doesn't resemble a real person?

- Claim Substantiation: Can we back up every single factual claim in the ad copy or voiceover with hard proof?

- AI Disclosure: If we're using an AI actor for a testimonial or endorsement, is the disclosure impossible to miss?

- Copyright Check: Is there anything in the output that looks like it might have been lifted from someone else’s copyrighted work?

- Brand Alignment: Does this ad actually sound and feel like our brand? Does it align with our values and messaging?
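For teams that track approvals in a spreadsheet or internal tool, the checklist above can be encoded as a simple publishing gate. The Python sketch below is one hypothetical way to do it: the `PrePublicationReview` class and its field names are illustrative assumptions, not part of any real platform. Every field is filled in by a human reviewer; the code only refuses to mark an ad as ready until each item is signed off, including the two-reviewer likeness scan. It can't judge whether a disclosure is actually clear and conspicuous or whether the evidence behind a claim is strong enough. People still have to make those calls.

```python
from dataclasses import dataclass


@dataclass
class PrePublicationReview:
    # People who independently checked the ad for resemblance to real individuals.
    likeness_reviewers: set[str]
    # Each factual claim in the copy or voiceover, mapped to "substantiation on file?".
    claims_substantiated: dict[str, bool]
    uses_ai_endorsement: bool
    ai_disclosure_present: bool
    # Anything a reviewer thinks may have been lifted from copyrighted work.
    copyright_concerns: list[str]
    brand_approved: bool

    def blocking_issues(self) -> list[str]:
        """List every checklist item that still blocks publication."""
        issues = []
        if len(self.likeness_reviewers) < 2:
            issues.append("likeness scan needs at least two reviewers")
        for claim, has_proof in self.claims_substantiated.items():
            if not has_proof:
                issues.append(f"unsubstantiated claim: {claim}")
        if self.uses_ai_endorsement and not self.ai_disclosure_present:
            issues.append("AI endorsement lacks a clear disclosure")
        for concern in self.copyright_concerns:
            issues.append(f"copyright concern: {concern}")
        if not self.brand_approved:
            issues.append("brand alignment not signed off")
        return issues

    def ready_to_publish(self) -> bool:
        return not self.blocking_issues()


review = PrePublicationReview(
    likeness_reviewers={"Ana"},
    claims_substantiated={"clinically proven to boost metabolism by 40%": False},
    uses_ai_endorsement=True,
    ai_disclosure_present=True,
    copyright_concerns=[],
    brand_approved=True,
)
for issue in review.blocking_issues():
    print(issue)
# prints:
# likeness scan needs at least two reviewers
# unsubstantiated claim: clinically proven to boost metabolism by 40%
```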

By weaving these steps into your workflow—vetting tools, setting policy, mandating human review, and using a final checklist—you build a strong system for managing the biggest legal threats. This framework doesn't slow you down; it empowers your team to get creative with AI while keeping the brand out of avoidable legal trouble.

Future-Proofing Your AI Advertising Strategy

The legal ground under AI advertising is constantly moving. To stay ahead, you need to treat compliance not as a chore, but as a genuine competitive advantage. This means building transparency, consent, and solid substantiation right into the heart of your creative process from day one.

New laws are popping up all the time, from federal deepfake rules to state-level privacy acts. But through it all, one fundamental truth won't change: the advertiser is always responsible for the ad's content. Accepting this is the first step toward managing the legal issues with AI actors in advertising.

Proactive legal compliance isn’t about being afraid of AI—it’s about earning consumer trust. When a brand is open about how it uses AI and careful about its claims, it's in a much better position to build lasting loyalty and sidestep expensive run-ins with regulators.

From Legal Risk to Creative Advantage

It's easy to see these legal guardrails as a creative roadblock, but that’s the wrong way to look at it. Think of them as the foundation for smart, sustainable innovation. Once your team understands the rules of the road, they can experiment freely and push creative boundaries within a safe framework.

For instance, imagine rapidly testing hundreds of ad variations for a new campaign. Because you have a solid human-in-the-loop review process, you know any potential likeness infringements or unsupported claims will be caught long before they ever see the light of day. That blend of AI's incredible speed with sharp human oversight is where the real magic happens, especially in performance marketing.

This responsible approach makes sure your creative engine doesn't turn into a legal liability. By staying on top of the rules and embedding ethical practices into your workflow, you can confidently use AI to create high-performing ads that are both compelling and legally sound.

Ultimately, the goal is to create a system where legal diligence and creative brilliance are two sides of the same coin. This integrated strategy lets your brand reap the rewards of AI-driven advertising while shielding it from the very real risks, ensuring your marketing efforts build brand value, not legal bills.

Frequently Asked Questions

Stepping into the world of AI-driven advertising can feel like navigating a minefield of new questions. Let's break down some of the most common legal concerns marketers have when it comes to using AI actors.

Do I Need to Disclose My Use of an AI Actor?

Yes, absolutely. Transparency isn't just a good idea—it's a legal necessity. The Federal Trade Commission (FTC) has been very clear that advertising cannot be deceptive. If you use a non-existent, AI-generated person to give a testimonial, and consumers think they're watching a real customer, you've crossed the line into misleading territory.

The best practice is always clear and conspicuous disclosure. A simple on-screen note like "AI-generated actor" or "Image created with AI" is often enough, as long as it's easy to see and hard to miss. This small step protects consumer trust, keeps you on the right side of the law, and safeguards your brand's integrity.

Can I Be Sued if an AI Actor Looks Like Someone by Coincidence?

This is a huge one, and the answer is a definite "maybe." This is where things get tricky with the right of publicity. If your AI-generated character ends up looking a lot like a real person (especially a celebrity), you could find yourself in legal hot water.

That person could argue you're using their likeness to sell a product without their permission. The key to avoiding this is a rock-solid human review process. At least two people on your team should review the final creative specifically to spot any accidental resemblances before you hit "publish."

The crucial legal test isn't about your intent; it's about how the public sees it. If a reasonable person could link your AI actor to a real individual, you're open to a potential lawsuit.

Who Is Legally Responsible for Claims an AI Makes?

You are. Always. The brand behind the ad is ultimately responsible for every single claim made, whether it's spoken by a human or an AI. If your AI actor says your product is "50% more effective," you'd better have the data to back that up.

You can't blame an AI "hallucination"—when the model just makes things up—if the FTC comes knocking. That's not a legal defense. This is why human oversight is non-negotiable; every fact, figure, and claim needs to be verified by a real person before your ad ever sees the light of day.

Ready to create high-performing ads without the legal headaches? ShortGenius helps you generate stunning, UGC-style video campaigns quickly while giving you the control to ensure compliance. Explore our tools and start building safer, more effective ads today. Find out more at https://shortgenius.com.