Top Guide: The Best Way to Test Multiple Ad Creatives with AI

Explore the best way to test multiple ad creatives with AI and learn a scalable, data-driven workflow for optimizing ad tests from start to finish.

The smartest way to test a bunch of ad creatives with AI is to build a solid, repeatable workflow. It's about combining rapid-fire, AI-generated ideas with a structured, data-driven testing plan. This approach goes far beyond the slow, clunky A/B tests of the past, letting you test hundreds of variations at once and get performance insights much faster.

Moving Beyond Manual Ad Creative Testing

Let's be real for a second: the old way of testing ad creative is completely broken. For years, the standard playbook was to run a simple A/B test. You'd pit one ad against another, wait weeks for the data to trickle in, and then make a call based on a pretty small sample size. That process was always slow and ate up a ton of resources, and it just can't keep up anymore.

If you're still manually creating two ad versions, sticking them in different ad sets, and just hoping for a clear winner, you're leaving a lot of money on the table. This method is often riddled with problems. Ad platforms might spend the budget unevenly, your audiences could overlap, and more often than not, the results are just inconclusive. You end up spending way too much time on the setup and not nearly enough on the actual strategy.

The Shift to Scalable AI Workflows

The fix isn't just about doing A/B tests faster; it's about a complete mindset shift. The best way to test multiple ad creatives with AI involves overhauling your entire process. Instead of thinking in terms of one or two variations, you need to start thinking in terms of dozens—or even hundreds. This is exactly where platforms like ShortGenius completely change the game.

Modern AI tools give marketers the power to:

- Generate Variations Instantly: In just a few minutes, you can create tons of versions of a single ad by swapping out different hooks, visuals, calls-to-action, or even voiceovers.

- Build a Test Matrix: You can set up tests in a structured way to isolate specific creative elements. This helps you figure out what really drives performance. Is it the hook? The visual? The offer?

- Launch with Confidence: By putting every variation in a single ad set, you make sure they all compete for the same audience under the same conditions. That gives you clean data and lets the ad platform's algorithm do its job and find the winners.

This AI-powered method absolutely crushes manual testing when it comes to speed, scale, and, most importantly, results. Some industry data suggests that AI-optimized creatives can achieve click-through rates up to twice as high as ads designed by hand.

The goal is no longer just to find a single winning ad. The goal is to build a continuous optimization engine that constantly feeds your campaigns with fresh, high-performing creative based on real-time data.

AI-Powered vs Manual Ad Creative Testing

When you put a manual process side-by-side with an AI-driven one, the difference in efficiency and potential is night and day. We're not just talking about a small improvement; it’s a whole new way to think about performance marketing. If you want to see these ideas in action, check out how to create high-performing, AI-generated UGC ads.

Here’s a quick breakdown of how the two approaches stack up:

| Aspect | Manual Testing | AI-Powered Testing |
|---|---|---|
| Speed | Slow; days or weeks to get results from a single test. | Rapid; identify winning elements within hours or days. |
| Scale | Limited to testing 2-4 variations at most. | Nearly unlimited; test hundreds of variations simultaneously. |
| Data Quality | Prone to skewed results from uneven budget spend. | Clean data from fair budget allocation in a single ad set. |
| Insights | Identifies a "winning ad" with limited context. | Reveals winning elements (hooks, visuals, CTAs) for future ads. |
| Resources | High; requires significant manual effort from designers and copywriters. | Low; AI handles the heavy lifting of creative generation. |

By making this shift, you're moving from a reactive "guess and check" model to a proactive, strategic one. Instead of just hoping something will work, you're systematically finding out what actually works. This allows you to make smarter, data-backed decisions that drive real growth—and it sets the stage perfectly for the specific strategies we're about to dive into.

Setting Up Your AI Experiment for Success

You can't just throw a bunch of AI-generated ads at the wall and hope something sticks. The secret to getting real results from AI creative testing is having a solid plan from the start. This means building a structured experiment that’s designed to give you clear, actionable insights—not just a mountain of confusing data. It’s about moving past vague goals like “let’s test a new video” and getting more scientific.

The whole thing starts with a strong creative hypothesis. This is just a simple, testable statement that connects a specific creative choice to a result you expect to see.

For example, instead of just running a generic test, you might form a hypothesis like this: "We believe a user-generated content (UGC) style hook will beat a direct product demo on TikTok because it feels more native to the platform, which should lead to a higher click-through rate (CTR)."

See the difference? That simple shift forces you to think strategically about why you're running the test, giving the entire experiment a clear purpose right out of the gate.

Isolate Your Variables for Clearer Insights

Once your hypothesis is locked in, the next move is to figure out exactly what you're testing. If you change the hook, the visuals, the copy, and the call-to-action all at once, you’ll have no idea which change actually made a difference. You have to be methodical.

Decide if you need a simple A/B test or something more complex, like a multivariate test.

- A/B Testing: This is your go-to for testing big, bold ideas. Think of it as comparing two completely different concepts. For instance, you could pit a polished, studio-shot ad against a raw, behind-the-scenes video to see which one resonates more.

- Multivariate Testing: This is what you use when you want to refine a concept that's already working. Here, you're testing multiple combinations of smaller elements—maybe three different headlines, two images, and two calls-to-action—to pinpoint the absolute best-performing combination.

As you build out your testing plan, it helps to know which AI tools for marketers are available. Many of these platforms are built specifically to handle the complexities of multivariate testing, which makes managing and analyzing all those creative variations much easier.

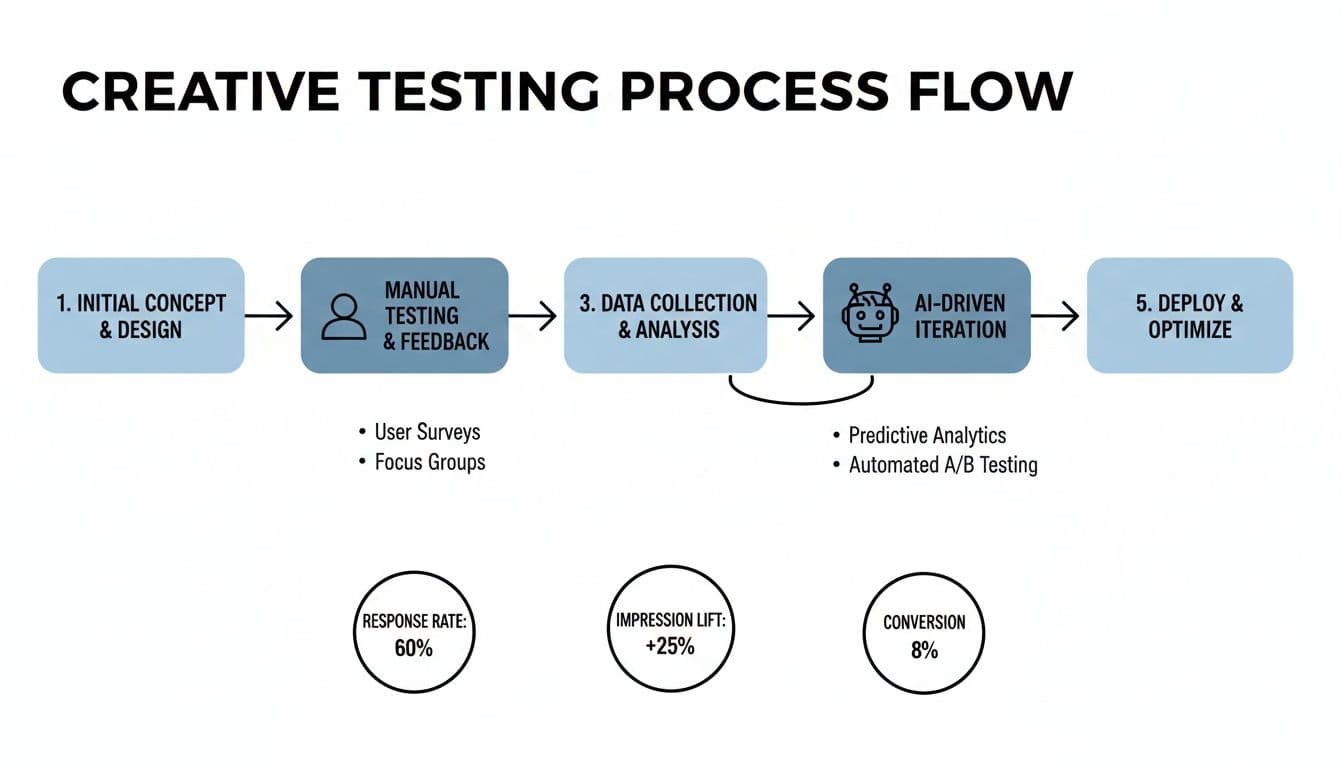

This process flow shows just how different a manual workflow is from one powered by AI.

As you can see, the AI approach automates the tedious parts—like creating variations and analyzing results—turning it into a continuous optimization loop that manual methods just can't keep up with.

Define Your Success Metrics Before You Start

A test without clear key performance indicators (KPIs) is just a guess. Before you spend a single dollar, you need to define what a "win" actually looks like. Is your main goal to lower your Cost Per Acquisition (CPA), boost your Return on Ad Spend (ROAS), or just get a better CTR?

Your primary KPI has to align with your overall business goal for the campaign. If you’re running a direct-response campaign, ROAS is almost always the king. But for a top-of-funnel brand awareness push, you might care more about metrics like Video View Rate or Engagement Rate.
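To make those KPI definitions concrete, here's a minimal Python sketch of how CTR, CPA, and ROAS fall out of raw campaign numbers. The `AdStats` structure and the sample figures are hypothetical; in practice you'd pull impressions, clicks, conversions, spend, and attributed revenue from your ad platform's reporting.

```python
from dataclasses import dataclass

@dataclass
class AdStats:
    """Raw delivery numbers for one creative (hypothetical field names)."""
    impressions: int
    clicks: int
    conversions: int
    spend: float    # in your account currency
    revenue: float  # attributed revenue, same currency

def ctr(s: AdStats) -> float:
    """Click-through rate: clicks per impression."""
    return s.clicks / s.impressions if s.impressions else 0.0

def cpa(s: AdStats) -> float:
    """Cost per acquisition: spend per conversion (infinite until the first conversion)."""
    return s.spend / s.conversions if s.conversions else float("inf")

def roas(s: AdStats) -> float:
    """Return on ad spend: attributed revenue per unit of spend."""
    return s.revenue / s.spend if s.spend else 0.0

ad = AdStats(impressions=20_000, clicks=300, conversions=15, spend=300.0, revenue=1_200.0)
print(f"CTR {ctr(ad):.2%}, CPA ${cpa(ad):.2f}, ROAS {roas(ad):.1f}x")
```

For the sample numbers this prints a 1.50% CTR, a $20.00 CPA, and a 4.0x ROAS. Whichever of these you pick as your primary KPI is the one every later comparison should use.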

Think about social media ads. The hook is everything. You have less than three seconds to grab someone's attention before they scroll on by. This is where AI really shines. It can spin up dozens of different hooks in minutes—from asking a provocative question to using a surprising visual cut—letting you scientifically test which approach is the best scroll-stopper.

The most effective AI testing frameworks are built on a simple cycle: hypothesize, isolate, and measure. This structure transforms creative testing from a budget-draining guessing game into a predictable engine for growth and learning.

By pairing a solid hypothesis with isolated variables and clear KPIs, you create a powerful feedback loop. For example, our AI-powered text-to-image models can generate a wide range of visual styles for a single product, and your framework would let you test whether a photorealistic style, an illustration, or an abstract image drives the best ROAS. That's how you get concrete data to guide your next creative brief. This disciplined approach makes sure every ad you run teaches you something valuable.

Generating Ad Variations at Scale With AI

Alright, with a solid experiment framework in hand, it's time for the fun part. This is where we let the AI loose and watch it turn our hypotheses into a whole library of ads ready for testing. The real magic of testing multiple ad creatives with AI is just how fast you can go from an idea to a full-blown campaign.

Think about one of your core product benefits, something like "saves users an average of five hours per week." In the old days, turning that single concept into a full set of test ads would have been a week-long project for a creative team. Now, with a platform like ShortGenius, you can feed it that one benefit and get back ten unique video scripts, five different UGC-style scenes, and four distinct voiceovers—all in just a few minutes.

This isn't about creating volume for the sake of it. We're building a strategic arsenal. The whole point is to have enough variations to systematically swap out individual elements, so you can finally get concrete answers about what actually moves the needle.

Building Your Creative Test Matrix

A test matrix is your roadmap for this whole process. It's just a simple, organized way to map out all the different combinations of creative elements you plan to test. You'll build this directly from the variables you identified in your hypothesis.

For a video ad, a straightforward matrix might look something like this:

| Hook (Variable A) | Visual Style (Variable B) | Call-to-Action (Variable C) |
|---|---|---|
| Hook 1: Question | Visual 1: Product Demo | CTA 1: "Shop Now" |
| Hook 2: Statistic | Visual 2: UGC Testimonial | CTA 2: "Learn More" |
| Hook 3: Bold Claim | Visual 3: Animation | CTA 3: "Get 20% Off" |

Look at that. With just three options for each element, you've suddenly created 27 unique ad combinations (3x3x3). Trying to produce all of those by hand would be a nightmare. But using an AI ad generator lets you piece these together almost instantly, filling out your test matrix with assets that are ready to go live.
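If you're assembling the matrix yourself (say, for a spreadsheet or a script that names your uploads), the combinations are just a Cartesian product. This Python sketch uses the standard library's `itertools.product` on the example options from the table; the ID scheme (`H1-V1-C1`) is a hypothetical naming convention, not something any ad platform requires.

```python
from itertools import product

# Options from the example matrix (one list per variable).
hooks = ["Question", "Statistic", "Bold Claim"]
visuals = ["Product Demo", "UGC Testimonial", "Animation"]
ctas = ["Shop Now", "Learn More", "Get 20% Off"]

# Every combination of one hook, one visual, and one CTA (3 x 3 x 3).
# The hypothetical ID encodes which option of each variable the ad uses.
test_matrix = [
    {"id": f"H{h}-V{v}-C{c}", "hook": hook, "visual": visual, "cta": cta}
    for (h, hook), (v, visual), (c, cta) in product(
        enumerate(hooks, 1), enumerate(visuals, 1), enumerate(ctas, 1)
    )
]

print(len(test_matrix))  # 27
print(test_matrix[0]["id"], test_matrix[-1]["id"])
```

Baking the element indices into each ad's name makes it much easier to slice the results by hook, visual, or CTA once data comes in.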

Using AI Features to Multiply Your Variables

Don't just stop at swapping out the big things like hooks and CTAs. The really good AI platforms come with features that can act as their own testable variables. These are the little things that can make someone stop scrolling and pay attention.

Try layering some of these AI-powered features into your tests:

- Scroll Stoppers: Think of these as quick visual jabs at the very start of a video. You could test a version with a "glitch" effect against one with a big, animated headline. The goal is to see which one does a better job of breaking that mindless scroll pattern.

- Camera Movements: AI can inject life into static images or boring clips by adding dynamic effects like a slow zoom or a dolly shot. Pit a static image against one with a subtle "push-in" effect and see if that little bit of motion keeps people watching longer.

- Surreal Effects: If your brand has a more creative or edgy vibe, AI-generated surreal effects can be a game-changer. Why show a standard product shot when you can test it against an image where the product is floating in some wild, dreamlike environment?

The single biggest advantage AI gives us here is the power to deconstruct what makes a creative work. You're no longer just hoping to find a winning ad; you're pinpointing the winning ingredients. That knowledge becomes your playbook for every campaign you run from now on.

By methodically testing hooks, visuals, CTAs, and even subtle AI effects, you’re gathering hard data on what your audience truly cares about. This is how you shift your marketing from a series of educated guesses to a cumulative learning system. To really expand your creative toolkit, exploring powerful AI ad creative generators can give you even more diverse assets to populate your test matrix.

An approach like this ensures you're never short on fresh, high-potential creatives, giving you a serious edge in any ad environment.

How to Launch and Monitor AI Test Campaigns

Alright, you've got your AI-generated ads and a solid test matrix. Now comes the moment of truth: launching the campaign. How you structure this launch on platforms like Meta or TikTok is just as important as the creatives themselves. A sloppy setup can contaminate your data from day one, leading you to make bad decisions based on faulty signals.

The name of the game is giving every creative a fair fight. In the old days, we’d meticulously build a separate ad set for each ad variation. That's a recipe for audience overlap and skewed budgets. The modern, and frankly much smarter, approach is to put all your test creatives into a single ad set. This ensures every ad competes for the same audience, under the exact same conditions.

Letting the Algorithm Find Winners for You

To make this work, Campaign Budget Optimization (CBO) is your best friend. Seriously. By setting one budget at the campaign level, you're handing the keys to the platform's algorithm. It will automatically start funneling money toward the ads that perform best.

This is a complete shift from manually trying to pick winners based on a hunch. The machine learning is designed to spot early performance signals and quickly divert spend to the creatives that are actually hitting your KPI, whether that's ROAS or CPA.

Think about it: platforms like Meta and Google are built on complex algorithms that thrive on data. When you feed them a diverse set of creatives, you’re giving them more chances to find that perfect combination of ad, person, and placement. This "creative optimization" has been shown to drop CPA by as much as 32% as the platform learns what visuals and messages resonate with different segments of your audience. You can dig deeper into driving performance with creative diversity directly on Meta's learning center.

Making Sense of the Numbers

As the results start trickling in, it's tempting to get excited about an ad with a low CPA after 24 hours. But is it a true winner or just a flash in the pan? This is where understanding statistical significance saves you from making premature calls. It’s a fancy term for a simple concept: how confident are you that the results aren't just random luck?

You don't need a degree in statistics, just a basic framework.

- Know Your Confidence Level: In most cases, aim for 90% to 95% confidence. At 95%, a performance gap as large as the one you're seeing would turn up less than 5% of the time if the ads were actually equally effective, so you can be reasonably sure the difference is real.

- Use a Calculator: Don't do the math yourself. There are plenty of free online A/B testing calculators that will do the heavy lifting. Just plug in each ad's numbers (impressions, clicks, conversions), and the calculator will spit out the confidence level.

- Wait for Volume: This is critical. Don't call a winner after 10 conversions. You need enough data for the results to mean something. A solid rule of thumb is to wait for at least 50-100 conversions per variation, though this can change based on your business.
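If you're curious what those calculators are doing, many of them run some version of a two-proportion z-test. Here's a minimal Python sketch of that test using only the standard library. The function names and sample numbers are illustrative, and individual calculators may use other methods (chi-squared, Bayesian), so their outputs won't always match this one exactly.

```python
from math import erf, sqrt

def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function via the error function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))

def confidence_level(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided two-proportion z-test, returned as confidence (1 - p-value).

    conv_* are successes (e.g. conversions); n_* are trials (e.g. clicks).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Assume "no real difference": both ads share one pooled conversion rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0  # all-or-nothing data: no evidence either way
    z = abs(p_a - p_b) / se
    p_value = 2 * (1 - normal_cdf(z))
    return 1 - p_value

# Ad A: 120 conversions from 2,000 clicks (6.0%); Ad B: 90 from 2,000 (4.5%).
print(f"{confidence_level(120, 2000, 90, 2000):.1%}")
```

In this example the confidence comes out just under 97%, clearing a 95% bar. The test only answers the "is it luck?" question; it still assumes you've collected enough volume in the first place.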

Don't be too quick to pull the plug on a creative just because it's off to a slow start. Ad performance ebbs and flows. Let the test run its course until you have the data to back up your decision. Patience prevents you from killing a potential winner before it has a chance to shine.

Setting Budgets and Timelines

So, how much should you spend, and for how long? Two of the most common mistakes I see are running tests with too little budget or cutting them off too soon.

A disciplined test needs a proper runway.

- Test Duration: Give your test at least 4-7 days. This gives the platform's algorithm enough time to get out of its "learning phase" and allows you to see how performance stabilizes across different days of the week.

- Budget Allocation: A great starting point is to budget for at least 50-100 conversions per creative variation. For example, if your target CPA is $20 and you're testing five creatives, your minimum test budget should be around $5,000 ($20 CPA x 50 conversions x 5 variations).
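Here's the same budget arithmetic as a small Python sketch, plus a rough estimate of how long the test will take to spend through at a given daily budget. The `daily_budget` input and the 7-day floor (the top of the 4-7 day range) are assumptions you'd adjust for your own account.

```python
import math

def min_test_budget(target_cpa: float, conversions_per_variation: int, variations: int) -> float:
    """Spend needed for every variation to reach the conversion target at the target CPA."""
    return target_cpa * conversions_per_variation * variations

def estimated_test_days(budget: float, daily_budget: float, floor_days: int = 7) -> int:
    """Days to spend the budget at a flat daily rate, never shorter than floor_days."""
    return max(floor_days, math.ceil(budget / daily_budget))

budget = min_test_budget(target_cpa=20, conversions_per_variation=50, variations=5)
print(budget)                                          # 5000
print(estimated_test_days(budget, daily_budget=500))   # 10
print(estimated_test_days(budget, daily_budget=1000))  # 7 (the floor wins)
```

Budgeting for 100 conversions per variation instead of 50 simply doubles the figure.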

Following this approach ensures your AI-powered tests produce clean, reliable data. It’s the foundation you need to scale your winning ads with total confidence.

Analyzing Results and Scaling Your Winners

Alright, your tests have run, the data is rolling in, and now comes the most important part: turning those numbers into actual profit. This is where the real magic of AI-powered creative testing happens. It’s not just about finding a single "winner." It's about figuring out why it won.

Effective analysis means going beyond the obvious metrics. You have to dig in and find the specific elements that made an ad connect. Was it a certain hook that made people stop scrolling? Did a particular visual style consistently crush the others? Or was a specific call-to-action the secret sauce for driving conversions? Nailing these components is how you create a repeatable formula for success.
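One simple way to surface winning ingredients is to pool results by element: add up impressions and clicks for every ad that shares the same hook (or visual, or CTA) and compare the pooled CTRs. The Python sketch below does this with hypothetical results. It only measures each element's average (main) effect, not interactions between elements, and because the algorithm spends unevenly, ads that got more delivery weigh more heavily in the totals.

```python
from collections import defaultdict

# Hypothetical per-ad results; in practice, export these from your ad platform.
results = [
    {"hook": "UGC", "visual": "Demo", "impressions": 10_000, "clicks": 220},
    {"hook": "UGC", "visual": "Animation", "impressions": 10_000, "clicks": 200},
    {"hook": "Statistic", "visual": "Demo", "impressions": 10_000, "clicks": 120},
    {"hook": "Statistic", "visual": "Animation", "impressions": 10_000, "clicks": 100},
]

def ctr_by_element(rows: list[dict], element: str) -> dict[str, float]:
    """Pool impressions and clicks across all ads sharing an element value."""
    totals = defaultdict(lambda: [0, 0])  # element value -> [impressions, clicks]
    for row in rows:
        totals[row[element]][0] += row["impressions"]
        totals[row[element]][1] += row["clicks"]
    return {value: clicks / imps for value, (imps, clicks) in totals.items()}

print(ctr_by_element(results, "hook"))
print(ctr_by_element(results, "visual"))
```

Here the UGC hook pools to a 2.1% CTR versus 1.1% for the statistic hook, and it beats the statistic hook with both visuals, which is exactly the kind of pattern worth carrying into the next round.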

From Winning Ads to Winning Ingredients

When you find a top-performing ad, the temptation is to just crank up the budget and move on. Don't do it. Instead, think of that ad as a blueprint for what comes next. Your job now is to isolate the parts that resonated and figure out how to replicate that success at scale.

This is how you build a powerful, continuous optimization loop. Let's say your data shows that a user-generated content (UGC) style hook absolutely killed it, driving a huge click-through rate (CTR) no matter what visual you paired it with.

Your next move becomes obvious:

- Isolate the Winner: Grab that high-performing UGC hook.

- Generate New Variations: Fire up a tool like ShortGenius and have it create ten slight variations of that exact hook.

- Combine and Test Again: Pair those fresh hooks with other promising elements from your first test, like different product shots or offers.
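The combine-and-test step can be sketched the same way: keep the fresh variations of the winning hook, pair them only with the strongest performers from the other variables, and you have your next matrix. In this Python sketch the scores, offer names, and hook labels are all hypothetical placeholders for your own round-one results and whatever your generator produces.

```python
from itertools import product

def top_values(scores: dict[str, float], n: int) -> list[str]:
    """Return the n element values with the highest scores."""
    return sorted(scores, key=scores.get, reverse=True)[:n]

# Hypothetical pooled CTRs per element from round one.
visual_scores = {"Demo": 0.017, "Animation": 0.015, "Lifestyle": 0.009}
offer_scores = {"Free Shipping": 0.016, "20% Off": 0.018, "Bundle": 0.012}

# Placeholders for ten fresh variations of the winning UGC hook.
new_hooks = [f"UGC hook v{i}" for i in range(1, 11)]

# Next round: every new hook paired with the top two visuals and top two offers.
round_two = list(product(new_hooks, top_values(visual_scores, 2), top_values(offer_scores, 2)))
print(len(round_two))  # 40
```

Ten hook variations times the top two visuals and top two offers gives 40 combinations. At 50 conversions each that's a big budget, so you may want to trim the hooks or keep only the single best visual.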

This approach transforms your ad strategy from a bunch of one-off bets into a system that gets smarter with every test. Each cycle builds on the last, systematically improving your creative performance over time.

The most profitable insights don't come from finding one lucky ad. They come from understanding the DNA of that ad—the hook, the pacing, the message—and using AI to clone that DNA into a new generation of high-potential creatives.

The Art of Scaling and Refreshing Creative

Once you've identified your winning elements, you can start scaling your campaigns with real confidence. This typically means gradually boosting the budget on your top ads while you phase out the ones that fell flat. But scaling isn't a "set it and forget it" game. The biggest killer here is ad fatigue.

Ad fatigue is a real problem, and it's expensive. I've seen great creatives lose as much as 50% of their effectiveness in just 7-10 days. This is where AI platforms are a game-changer. Instead of watching a winning ad burn out, you can rapidly refresh it by swapping a few scenes, changing the voiceover, or updating the text overlays. This extends its profitable lifespan without having to go back to square one. You can read more about how to combat ad fatigue with fresh creative on revealbot.com.
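To catch fatigue before it eats your margin, you can watch for a sustained drop from a creative's best performance. This Python sketch compares the latest 3-day rolling CTR with the best 3-day rolling CTR so far; the rolling window smooths out normal day-to-day swings. The window size, the 30% refresh threshold, and the sample data are hypothetical and worth tuning to your own account.

```python
def fatigue_drop(daily_ctr: list[float], window: int = 3) -> float:
    """Fractional drop of the latest rolling-average CTR versus its best rolling average."""
    if len(daily_ctr) < window:
        return 0.0  # not enough history yet
    rolling = [
        sum(daily_ctr[i:i + window]) / window
        for i in range(len(daily_ctr) - window + 1)
    ]
    peak = max(rolling)
    return (peak - rolling[-1]) / peak if peak else 0.0

def needs_refresh(daily_ctr: list[float], threshold: float = 0.30, window: int = 3) -> bool:
    """Flag a creative once its rolling CTR has fallen at least `threshold` from its peak."""
    return fatigue_drop(daily_ctr, window) >= threshold

# Hypothetical ten days of CTR for one creative.
ctr_history = [0.020, 0.021, 0.022, 0.020, 0.017, 0.014, 0.012, 0.011, 0.010, 0.010]
print(f"{fatigue_drop(ctr_history):.0%} below peak, refresh: {needs_refresh(ctr_history)}")
```

On the sample series the rolling CTR has fallen by roughly half from its peak, so the creative would be flagged for a refresh.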

Building a Long-Term Creative Strategy

The best way to approach AI-powered creative testing is to treat it as an ongoing strategy, not a one-time project. The insights from every test should feed directly into your next creative brief, influencing everything from scripts to visual styles.

Here’s what that looks like in the real world:

- Create a "Creative Playbook": Start documenting what works. For example: "For our TikTok audience, hooks that ask a direct question outperform bold statements by 30%." This becomes your team's single source of truth.

- Automate Your Winners: Use tools like ShortGenius to automatically publish refreshed versions of your winning ads on a set schedule. This keeps your campaigns from going stale.

- Reallocate Resources Smartly: Instead of constantly trying to come up with brand-new ideas from a blank page, shift your team's creative energy toward iterating on proven concepts.

This strategic approach ensures every dollar you spend on testing delivers a return—not just in immediate sales, but in valuable, long-term creative intelligence. It’s how you stop guessing what works and start knowing.

Common Questions About AI Ad Creative Testing

Jumping into AI-powered creative testing is exciting, but let's be real—it brings up a lot of practical questions. Any time you switch up your workflow, you're bound to hit a few snags. The good news is, most of them are pretty easy to sort out.

Let's walk through some of the most common questions I hear from marketers as they move toward a smarter, more automated way of testing their ads.

This isn't some fringe idea anymore. Generative AI is quickly becoming standard practice in marketing. In fact, as of early 2024, nearly 29% of marketers were already using it in their day-to-day work. Why? Because it dramatically cuts the time and money it takes to create content, making a robust testing strategy accessible to pretty much everyone. You can dig into more statistics on AI's impact on ad performance over at amraandelma.com.

Nailing these details down early on will give you the confidence to really lean into an AI-first creative strategy.

How Much Should I Budget for Testing?

This is the big one, and the honest answer is: it depends. There's no magic number. A solid rule of thumb I've always followed is to set aside 10% to 20% of your total ad spend just for experimentation. This gives you a dedicated sandbox to learn and innovate without messing with the campaigns that are already printing money.

If you want to get more specific, you can work backward from your main KPI.

- What's your goal? You’ll want to aim for at least 50-100 conversions for each creative you're testing to get a clear, statistically significant read.

- Do the math: Multiply that conversion target by your target Cost Per Acquisition (CPA), then by the number of variations you're testing.

- There's your budget: For example, if your target CPA is $30 and you're testing five different ad variations, your test budget should be at least $7,500 ($30 CPA x 50 conversions x 5 ads).
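You can also run the two approaches against each other: the 10-20% rule caps what you can spend, and the CPA math tells you what each variation costs. This Python sketch (function names and sample spend figures are hypothetical) works out how many variations fit inside the experimentation budget.

```python
import math

def experimentation_budget(total_ad_spend: float, share: float = 0.20) -> float:
    """Portion of total ad spend set aside for testing (default: top of the 10-20% range)."""
    return total_ad_spend * share

def max_affordable_variations(total_ad_spend: float, target_cpa: float,
                              conversions_per_variation: int = 50,
                              share: float = 0.20) -> int:
    """How many variations can each reach the conversion target within the testing budget."""
    cost_per_variation = target_cpa * conversions_per_variation
    return math.floor(experimentation_budget(total_ad_spend, share) / cost_per_variation)

# $30 target CPA x 50 conversions = $1,500 per variation.
print(max_affordable_variations(50_000, 30))              # 6
print(max_affordable_variations(30_000, 30))              # 4
print(max_affordable_variations(30_000, 30, share=0.10))  # 2
```

So the five-variation, $7,500 test in the example fits inside a $50,000 budget but would need to shrink for a $30,000 one.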

Following this formula makes sure you don't cut the test short and end up making calls based on a handful of lucky clicks.

Can I Really Trust AI-Generated Creative Ideas?

I get it. It feels a little weird to trust an algorithm with brand voice and customer psychology. But you have to think about AI's role differently. It isn't here to take over your job as a strategist; it’s here to make you better at it.

Picture AI as the world's fastest brainstorming partner. It can spit out hundreds of hooks, headlines, and visual concepts in the time it takes you to grab a coffee. Your role then becomes that of a creative director—curating the gold from the AI's output and polishing it to perfectly match your brand.

The teams killing it with AI aren't just copy-pasting what the machine gives them. They use it to generate a massive pool of ideas, then apply their human expertise to pick and refine the ones with real strategic punch. It’s a collaboration, not a replacement.

Ultimately, the data will do the convincing. The first time you see an AI-suggested hook crush an angle your team spent hours on, you'll see how it uncovers patterns you might have otherwise missed.

How Do I Avoid Wasting Money on Losing Ads?

This worry comes from the old way of doing things, where launching a new ad was a huge, time-consuming bet. With rapid AI testing, you need to flip that mindset. "Losing" ads aren't a waste of money anymore—they're cheap data.

Every single variation that bombs is teaching you something critical about what your audience doesn't respond to. That process of elimination is incredibly powerful. It helps you zero in on what works so much faster, making all your future creative work smarter and more effective from the get-go.

Plus, if you're using tools like Campaign Budget Optimization (CBO) on platforms like Meta, the algorithm does a lot of the work for you. It quickly spots the duds and automatically pushes more budget to the ads that are getting traction. It’s a built-in safety net that lets every creative get a fair shot without you having to burn through cash. This is a core part of testing at scale—let the machine handle the optimization.

Ready to stop guessing and start knowing what creative drives results? With ShortGenius, you can generate, test, and scale high-performing ads faster than ever before. Get started for free today!