The Best Way to Test Multiple Ad Creatives With AI

Discover the best way to test multiple ad creatives with AI. This guide reveals a practical workflow for creating, testing, and scaling ads to maximize ROI.

If you want to test a ton of ad creatives effectively, the answer is to stop thinking like a traditional A/B tester. The old way is too slow and manual. The real key is moving to a high-volume, automated system where AI does the heavy lifting—from brainstorming ideas and creating variations all the way to analyzing the results.

This isn't just about finding one "winning ad." It's about building a system to discover precisely which parts of your ads resonate with your audience, so you can win consistently.

Moving Beyond Guesswork in Ad Creative Testing

Let's be honest. If you're still painstakingly setting up one-on-one A/B tests to compare two ad variations, you're playing by outdated rules. That old-school approach is slow, incredibly limited, and often relies more on a gut feeling than hard data. Sure, you might find a headline that’s marginally better, but you’re missing the forest for the trees.

The modern way to test completely flips this on its head. Instead of asking, "Does Ad A beat Ad B?" we’re asking, "Which specific elements—the hook, the headline, the visual, the CTA—are actually driving conversions?" This is where AI becomes a performance marketer's best friend.

The New AI-Driven Workflow

What we're talking about here is a workflow that systematizes creativity. Modern AI tools can spit out dozens of compelling headlines, script ideas, and visual concepts in the time it takes to grab a coffee. This lets you build a massive library of creative components to mix and match in your tests.

This isn't just a theoretical improvement; it has a real impact on the bottom line. According to recent industry data, AI-optimized ad creatives can, in some cases, double click-through rates (CTR) compared to manually designed ads. The main reason is that AI lets you generate and test far more variations, far faster, than a human team could on its own. You can dig into the numbers in this report on AI-generated ad creative performance statistics.

The goal is no longer to find a single winning ad. It’s to build a playbook of winning components that you can recombine and deploy across campaigns for consistent performance. This is how you create a sustainable competitive advantage.

When you shift from a simple one-vs-one comparison to a many-vs-many analysis, you uncover much deeper insights. You don't just learn that a video ad did well. You learn that a specific three-second hook, paired with a benefit-focused headline and a direct CTA, is your golden formula.

To really drive this home, let’s look at how the old and new methods stack up.

Traditional A/B Testing vs AI-Powered Creative Testing

The table below breaks down the fundamental differences between the slow, manual process many are still stuck in and the fast, scalable approach that top performers are adopting.

| Aspect | Traditional A/B Testing | AI-Powered Creative Testing |
|---|---|---|
| Scale | Tests 2-4 ad variants | Tests hundreds or thousands of combinations |
| Speed | Weeks to get conclusive results | Days to identify winning elements |
| Insights | Identifies the "best" ad overall | Reveals the best headlines, visuals, and CTAs |
| Process | Manual setup, launch, and analysis | Automated generation, organization, and analysis |

As you can see, it’s not just an upgrade—it's a complete change in strategy. One is about picking a winner from a small lineup, while the other is about building an entire roster of all-stars.

Setting the Stage for Smart AI Ad Testing

It’s tempting to dive headfirst into AI tools, but that's a surefire way to burn through your ad budget with nothing to show for it. The smartest way to test a ton of ad creatives with AI always starts with a solid, human-led strategy. Before you ask an AI to generate a single headline or image, you need to be crystal clear on what success actually looks like.

Are you trying to drive your Cost Per Acquisition (CPA) down, or are you focused on hitting a higher Return On Ad Spend (ROAS)? They might sound similar, but these are very different goals that will change how you build and test everything. A campaign built to get cheap leads looks completely different from one designed to land high-value customers.

This is the part where you decide which Key Performance Indicators (KPIs) really matter. It's easy to get sidetracked by vanity metrics like impressions or even high click-through rates, but you have to focus on the numbers that actually impact your business.

Pinpointing Your Core Metrics and Campaign Goals

Your main goal should be a single, measurable outcome. For an e-commerce brand, that might be hitting a 4x ROAS. For a SaaS company, the target could be locking in a $50 CPA for every new demo sign-up.

With your primary goal locked in, you can identify the secondary metrics that tell you if you’re moving in the right direction.

- Conversion Rate (CVR): What percentage of people who click actually take the action you want?

- Cost Per Click (CPC): How efficient are your ads at simply getting people to your site?

- Average Order Value (AOV): This one's crucial for understanding if you're attracting big spenders or bargain hunters.
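To make these definitions concrete, here is a minimal Python sketch that derives each metric from raw campaign totals. The field names (`spend`, `clicks`, `conversions`, `revenue`) and the sample numbers are hypothetical; your ad platform's export will use its own column names, and AOV here assumes one order per conversion.

```python
from dataclasses import dataclass


@dataclass
class CampaignTotals:
    """Raw totals for one ad or campaign (hypothetical field names)."""
    spend: float       # ad spend in your account currency
    clicks: int        # link clicks
    conversions: int   # purchases, demo sign-ups, or whatever your goal event is
    revenue: float     # revenue attributed to those conversions


def ratio(numerator: float, denominator: float) -> float:
    """Safe division: returns NaN instead of raising when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


def kpis(t: CampaignTotals) -> dict[str, float]:
    """Derive the primary and secondary metrics from raw totals."""
    return {
        "CPA": ratio(t.spend, t.conversions),    # cost per acquisition
        "ROAS": ratio(t.revenue, t.spend),       # return on ad spend; 4.0 means "4x"
        "CVR": ratio(t.conversions, t.clicks),   # share of clicks that convert
        "CPC": ratio(t.spend, t.clicks),         # cost per click
        "AOV": ratio(t.revenue, t.conversions),  # average order value (one order per conversion)
    }


if __name__ == "__main__":
    # Hypothetical week of data for one ad.
    week = CampaignTotals(spend=1_000.0, clicks=800, conversions=40, revenue=4_200.0)
    for name, value in kpis(week).items():
        print(f"{name}: {value:.2f}")
```

For this made-up week the script reports a $25 CPA, a 4.2x ROAS, a 5% CVR, a $1.25 CPC, and a $105 AOV, so the ad would clear a 4x ROAS goal.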

Deciding on these metrics now keeps you from getting lost in a sea of data later on. It gives your AI-powered tests a clear target, ensuring the algorithm is optimizing for what truly grows your bottom line.

Breaking Down Your Ads into Testable Pieces

To get the most out of AI, you have to stop thinking of an ad as one single thing. Instead, break it down into its core building blocks—I like to call them "atomic components." This is the real secret to generating and testing thousands of effective combinations at scale.

Think of each component as a variable the AI can play with.

- The Hook: The first 1-3 seconds of your video or the boldest part of your image.

- The Headline: The main text that does the heavy lifting to grab attention.

- Body Copy: The text that fills in the details and persuades the reader.

- The Visual: The image, video clip, or piece of user-generated content.

- The Call-to-Action (CTA): The button or phrase that tells people exactly what to do next.

When you isolate these elements, you can give the AI specific instructions to create variations for each one. This lets you test real hypotheses, such as "Does a question-based headline pull better than a bold statement?" or "Does a product close-up outperform a lifestyle shot?" You're essentially creating a structured playground for high-volume testing. For example, you can see how AI helps generate compelling UGC ads by deconstructing authentic user videos into dozens of testable hooks and scenes.

Matching Creative Angles to Audience Segments

The last piece of the puzzle is smart audience segmentation. Forget about just targeting broad demographics like age and location. The real magic happens when you align specific creative angles with people's behaviors or mindsets.

Think about the different reasons people might buy from you.

- New Prospects: These people have no idea who you are. They'll likely respond best to ads that introduce their problem and frame your product as the perfect solution.

- Cart Abandoners: They were this close to buying. They just need a gentle reminder, maybe an ad with a great review or a small discount to get them over the line.

- Loyal Customers: They already love you. You can hit them with ads showcasing new products, loyalty perks, or exclusive offers.

By building out these distinct audience segments, you can direct your AI to generate creative that speaks directly to what each group cares about. An ad that crushes it with a cold audience will often fall flat with your loyal customers, and vice versa.

Getting this strategic groundwork right is what turns AI from a simple content-spinner into a true optimization engine. With clear goals, broken-down components, and smart segments, you're ready to run tests that deliver clear, actionable, and profitable results.

Generating and Managing Ad Variants at Scale

Once your strategy is locked in, it’s time for the fun part: using AI to churn out the raw creative material for your experiments. This is where you move from painstakingly crafting a handful of ad options to generating a massive library of high-quality components almost instantly.

Think about it. A few years ago, coming up with 50 different headlines for one product feature would have eaten up half a day in a brainstorming session with your whole team. Now, an AI tool can get it done in about five minutes. That’s the kind of scale we’re talking about.

Fueling Your Tests with AI-Generated Creatives

The goal here isn't just to make more stuff; it's about creating structured variation. You're building a diverse portfolio of testable elements, not just a pile of ads. AI is brilliant at this because it can explore different emotional angles, tones, and styles for the same core message.

- For copywriting, tools like Jasper or Copy.ai can take a single product benefit and spin it into dozens of unique headlines and ad copy versions. You can prompt them to write in an urgent tone, a humorous one, or an empathetic one to see what truly resonates. For a more integrated approach, you can even explore an AI ad generator that handles the whole process from initial concept to the final creative.

- For visuals, the possibilities are staggering. Platforms like Midjourney or DALL-E 3 can produce an incredible range of image concepts from a simple text prompt. Need a photorealistic shot of your product on a mountaintop? An animated character? An abstract graphic that captures a feeling? You can test visual themes at a speed and cost that was simply impossible before.
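One way to get structured variation rather than a random pile of copy is to build your prompts programmatically, crossing each audience segment's angle with each tone. The sketch below only assembles prompt strings; it assumes you paste them into (or send them to) whichever copywriting tool you use, and the segment angles and prompt wording are illustrative, not a recommended template.

```python
from itertools import product

# Illustrative creative angle per audience segment (from the segments described earlier).
SEGMENT_ANGLES = {
    "New Prospects": "introduce the problem and frame the product as the solution",
    "Cart Abandoners": "remind them what they left behind, using social proof or a small incentive",
    "Loyal Customers": "showcase new products, loyalty perks, or exclusive offers",
}

TONES = ["urgent", "humorous", "empathetic"]


def build_prompts(benefit: str, n_headlines: int = 10) -> list[dict[str, str]]:
    """Return one prompt per (segment, tone) pair for a single product benefit."""
    prompts = []
    for (segment, angle), tone in product(SEGMENT_ANGLES.items(), TONES):
        text = (
            f"Write {n_headlines} ad headlines for this benefit: '{benefit}'. "
            f"Audience: {segment}. Angle: {angle}. Tone: {tone}. "
            "Return one headline per line with no numbering."
        )
        prompts.append({"segment": segment, "tone": tone, "prompt": text})
    return prompts


if __name__ == "__main__":
    for p in build_prompts("cushioning that reduces knee strain"):
        print(p["segment"], "|", p["tone"])
```

Three segments times three tones gives nine prompts per benefit, and tagging each prompt with its segment and tone makes it easy to record those labels in your test tracking later.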

Even the big ad platforms are baking these capabilities right in. Meta's Advantage+ Creative, for example, can automatically tweak your ads by applying visual filters, testing different aspect ratios, or even adding music to still images. These native tools are built to work with the platform's algorithms, which can give your AI-assisted creative a nice performance boost.

The Creative Matrix: Your Secret to Staying Organized

Unleashing an AI to generate hundreds of creative assets is exciting, but it can turn into absolute chaos without a system. If you can't remember which headline was paired with which image and call-to-action, your test data is worthless. This is why you need a Creative Matrix.

It sounds fancy, but it’s really just a simple spreadsheet that acts as your central command center. It systematically maps out every single combination of creative elements you plan to test and gives each unique variant a clear identifier.

A Creative Matrix is the bridge between AI-powered generation and structured, scientific testing. It turns a mountain of creative assets into an organized, analyzable experiment, preventing you from getting lost in your own data.

By setting this up before you launch, you ensure that every ad's performance can be tracked precisely. When the results roll in, you’ll be able to easily trace that amazing conversion rate back to the exact combination of Headline V4, Image V2, and CTA V1.

Building Your Own Creative Matrix

You don't need a complex piece of software for this. A simple Google Sheet or Excel file works perfectly. The key is to be methodical. You'll create columns for each ad component (headline, image, CTA, etc.) and rows for every unique combination.

Here’s a simplified template for how to organize your ad components for a multivariate test.

Sample AI Creative Variant Matrix

| Ad ID | Audience Segment | Headline Variant | Image Variant | CTA Variant |
|---|---|---|---|---|
| RUN-001 | New Prospects | H1: "Run Faster, Hurt Less" | IMG1: Product close-up | CTA1: "Shop Now" |
| RUN-002 | New Prospects | H2: "Meet Your New PR" | IMG1: Product close-up | CTA1: "Shop Now" |
| RUN-003 | New Prospects | H1: "Run Faster, Hurt Less" | IMG2: Lifestyle action shot | CTA1: "Shop Now" |
| RUN-004 | New Prospects | H2: "Meet Your New PR" | IMG2: Lifestyle action shot | CTA2: "Learn More" |
| RUN-005 | Cart Abandoners | H3: "Still Thinking About It?" | IMG3: Customer review | CTA1: "Shop Now" |
| RUN-006 | Cart Abandoners | H4: "Free Shipping Ends Soon" | IMG3: Customer review | CTA3: "Complete Order" |

This system gives you total clarity. The Ad ID becomes your naming convention inside the ad platform, which makes connecting the performance data back to your matrix a breeze.
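If you'd rather not fill in the matrix by hand, a short script can enumerate the combinations and assign sequential Ad IDs in the same `RUN-001` style. This is a sketch assuming a full-factorial design (every headline paired with every image and CTA); the sample table above is a hand-picked subset, so delete any rows you don't want to test. The component dictionaries are hypothetical, and the script prints CSV to standard output so you can redirect it to a file and import it into Google Sheets or Excel.

```python
import csv
import sys
from itertools import product
from typing import TextIO

# Hypothetical component libraries for one audience segment.
HEADLINES = {"H1": "Run Faster, Hurt Less", "H2": "Meet Your New PR"}
IMAGES = {"IMG1": "Product close-up", "IMG2": "Lifestyle action shot"}
CTAS = {"CTA1": "Shop Now", "CTA2": "Learn More"}

COLUMNS = ["Ad ID", "Audience Segment", "Headline Variant", "Image Variant", "CTA Variant"]


def build_matrix(segment: str, prefix: str = "RUN", start: int = 1) -> list[dict[str, str]]:
    """Enumerate every headline x image x CTA combination and give each a unique Ad ID."""
    rows = []
    combos = product(HEADLINES.items(), IMAGES.items(), CTAS.items())
    for i, ((h_id, h), (img_id, img), (cta_id, cta)) in enumerate(combos, start=start):
        rows.append({
            "Ad ID": f"{prefix}-{i:03d}",  # reuse this exact string as the ad name in the platform
            "Audience Segment": segment,
            "Headline Variant": f'{h_id}: "{h}"',
            "Image Variant": f"{img_id}: {img}",
            "CTA Variant": f'{cta_id}: "{cta}"',
        })
    return rows


def write_matrix(rows: list[dict[str, str]], out: TextIO) -> None:
    """Write the matrix as CSV (the csv module handles quoting of commas and quotes)."""
    writer = csv.DictWriter(out, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)


if __name__ == "__main__":
    matrix = build_matrix("New Prospects")  # 2 x 2 x 2 = 8 variants
    write_matrix(matrix, sys.stdout)        # redirect to a .csv file to save it
```

Generating the IDs and the matrix in one step means the name you paste into the ad platform and the row in your spreadsheet can never drift apart.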

This disciplined approach is non-negotiable. It’s what channels the massive creative output from AI into a structured, learnable experiment. Without it, you’re just making more noise. With it, you're building a machine for discovering exactly what makes people click.

Running Smarter Ad Experiments with Automation

So you've used AI to spin up a massive library of creative assets. Now what? The next move is designing an experiment that actually tells you something useful. This is a common stumbling block for marketers—we either run tests so simple they don’t yield any deep insights or we make them so complex they’re impossible to manage.

The secret is picking the right testing method for your goals and then letting automation do the heavy lifting. The old way of just doing basic A/B tests isn't going to cut it when you're working with dozens or even hundreds of AI-generated components.

Choosing the Right Testing Framework

You’ve really got two main options for structured testing: A/B testing and multivariate testing. An A/B test is as straightforward as it gets. You pit one completely different ad against another to see which one performs better. It’s perfect for testing big, bold changes, like a video ad versus a static image.

Multivariate testing, on the other hand, is where AI's power to generate variants really comes alive. Instead of testing two completely different ads, you’re testing multiple components all at once—think five headlines, four images, and three calls-to-action. The ad platform then mixes and matches all these elements on the fly to pinpoint the single most effective combination.
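As a quick sanity check on scale: a full-factorial multivariate test multiplies the number of variants of each component, so the example above implies

```latex
N = n_{\text{headline}} \times n_{\text{image}} \times n_{\text{CTA}} = 5 \times 4 \times 3 = 60 \text{ combinations}
```

Each of those combinations needs its own share of impressions and conversions, which is why the count matters when you size a test. Whether a particular platform serves every combination or only a subset is something to confirm in that platform's documentation.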

To get the most out of your experiments, you need to know when to use which method. For a deeper dive, a guide on multivariate vs. A/B testing can help clarify when a simple showdown is enough and when a more complex test will give you richer data.

Pro Tip: Here’s how I approach it. Start with A/B tests to validate high-level strategy (like a "pain point" angle versus a "benefit" angle). Once you find a winning strategy, switch to multivariate tests to fine-tune and optimize the individual components within that winning concept.

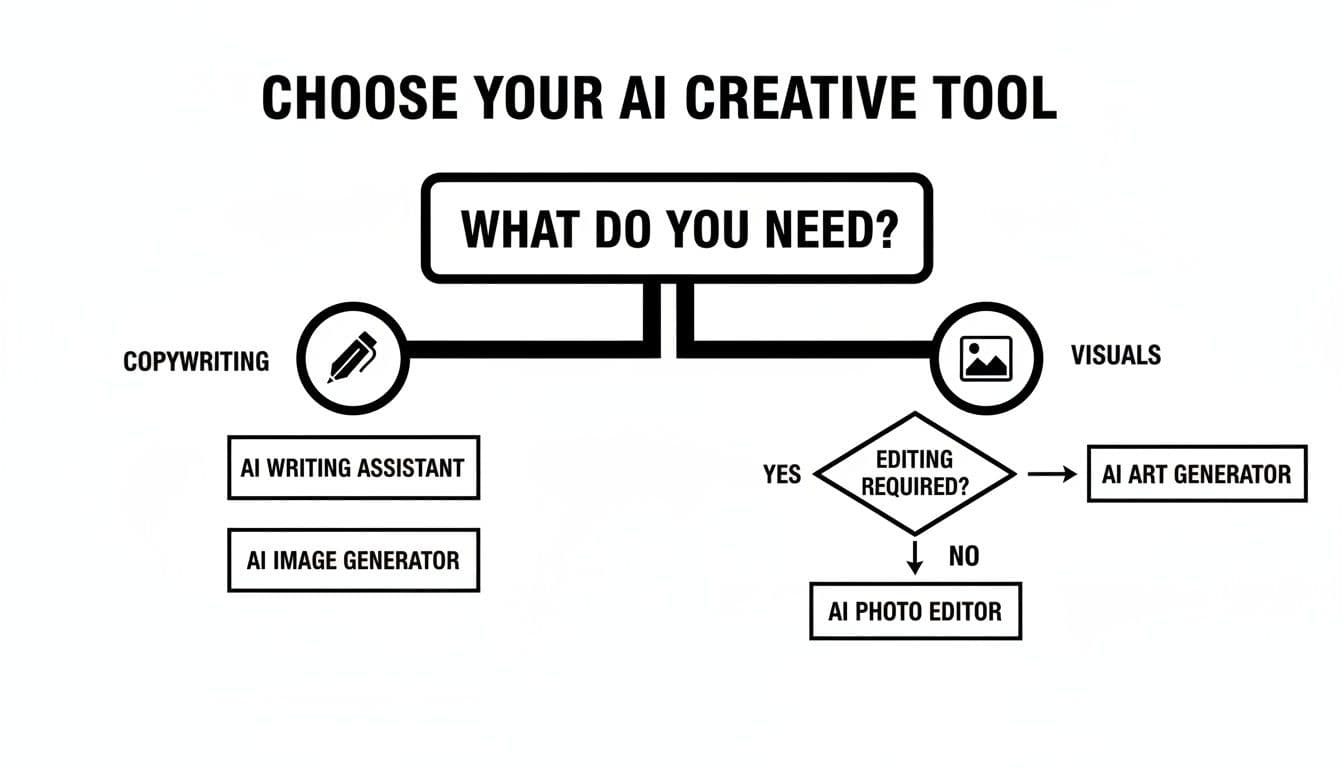

This decision tree is a great mental model for quickly figuring out which type of AI tool you need based on your immediate bottleneck, whether that's copywriting or generating visuals.

Embracing Adaptive Testing and Automation

Beyond those structured tests, today's ad platforms like Meta and Google offer something even better: adaptive testing. Often powered by multi-armed bandit algorithms, this approach doesn't wait for a test to finish. Instead, the algorithm intelligently shifts your budget toward the winning creative variants in real time. This is huge because it cuts down on wasted ad spend and gets you to your best-performing creative much faster.
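To see why adaptive allocation wastes less spend, here is a toy simulation of Thompson sampling, one common multi-armed bandit algorithm. It is not a description of how Meta, Google, or any other platform allocates budget internally; the three "true" conversion rates are made up, and each simulated impression simply converts with its variant's true probability.

```python
import random


def thompson_sampling(true_rates: list[float], impressions: int, seed: int = 42) -> list[int]:
    """Simulate budget allocation across creative variants with Thompson sampling.

    Each variant keeps a Beta(successes + 1, failures + 1) posterior over its
    conversion rate. For every impression we draw one sample per variant and
    show the variant with the highest draw, so spend drifts toward winners
    while weaker variants still get occasional exploration.
    Returns how many impressions each variant received.
    """
    rng = random.Random(seed)  # seeded so the simulation is reproducible
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    shown = [0] * len(true_rates)

    for _ in range(impressions):
        draws = [rng.betavariate(s + 1, f + 1) for s, f in zip(successes, failures)]
        arm = max(range(len(draws)), key=draws.__getitem__)
        shown[arm] += 1
        if rng.random() < true_rates[arm]:  # simulated conversion
            successes[arm] += 1
        else:
            failures[arm] += 1
    return shown


if __name__ == "__main__":
    rates = [0.02, 0.03, 0.05]  # hypothetical true conversion rates
    allocation = thompson_sampling(rates, impressions=20_000)
    for rate, n in zip(rates, allocation):
        print(f"true CVR {rate:.0%}: {n} impressions")
```

Compared with an even three-way split, the simulation sends most impressions to the 5% variant while still giving the weaker ones enough traffic to be ruled out, which is the behavior the adaptive approach relies on.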

Take Meta’s built-in creative testing feature. It lets you test a bunch of creatives inside a single ad set and is designed to split the budget fairly between them and, crucially, prevent audience overlap. This gives you a much cleaner, more reliable testing environment than trying to kludge it together manually.

To really put this on autopilot, you can lean on automation rules. These are basically simple "if-then" commands you can set up right inside the ad platforms.

- Rule Example 1: If an ad's Cost Per Acquisition (CPA) is 20% higher than the ad set average after spending $50, automatically pause the ad.

- Rule Example 2: If an ad's Click-Through Rate (CTR) dips below 0.5% after 10,000 impressions, send me a notification to take a look.
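These rules are normally configured in the ad platform's own rules interface, but writing them out as code makes their logic explicit. This is a sketch under stated assumptions: the `AdStats` fields are hypothetical export columns, "ad set average CPA" is taken as total ad set spend divided by total ad set conversions, and an ad with spend but zero conversions is treated as having an infinite CPA.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    """Hypothetical per-ad totals exported from the ad platform."""
    ad_id: str
    spend: float
    conversions: int
    impressions: int
    clicks: int


def evaluate_rules(ads: list[AdStats]) -> dict[str, list[str]]:
    """Apply the two example rules to one ad set and return the actions per Ad ID."""
    total_spend = sum(a.spend for a in ads)
    total_conversions = sum(a.conversions for a in ads)
    # Ad set average CPA; undefined (None) if nothing in the ad set has converted yet.
    avg_cpa = total_spend / total_conversions if total_conversions else None

    actions: dict[str, list[str]] = {}
    for ad in ads:
        todo = []
        # Rule 1: once an ad has spent $50, pause it if its CPA is at least 20% above the average.
        cpa = ad.spend / ad.conversions if ad.conversions else float("inf")
        if avg_cpa is not None and ad.spend >= 50 and cpa >= 1.2 * avg_cpa:
            todo.append("pause")
        # Rule 2: once an ad has 10,000 impressions, flag it if CTR is below 0.5%.
        if ad.impressions >= 10_000 and ad.clicks / ad.impressions < 0.005:
            todo.append("notify")
        if todo:
            actions[ad.ad_id] = todo
    return actions


if __name__ == "__main__":
    ad_set = [
        AdStats("RUN-001", spend=100.0, conversions=5, impressions=12_000, clicks=120),
        AdStats("RUN-002", spend=100.0, conversions=2, impressions=12_000, clicks=40),
        AdStats("RUN-003", spend=30.0, conversions=0, impressions=4_000, clicks=10),
    ]
    print(evaluate_rules(ad_set))
```

With these invented numbers only RUN-002 is flagged: its $50 CPA is well above the ad set average of about $32.86, and its CTR is about 0.33%. RUN-003 hasn't reached either spend or impression threshold yet, so it is left alone.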

These rules create a self-managing system. You define the strategic guardrails, and the platform’s automation takes care of the tedious, minute-by-minute adjustments. This frees you up to focus on what matters: analyzing the results and brainstorming the next wave of experiments.

When you need to feed this testing machine with a high volume of visual assets, the right tool is a game-changer. For instance, a platform like https://shortgenius.com can help you churn out numerous video ad variations from a single idea, giving your automated tests a constant stream of fresh creative to work with.

By pairing a smart testing framework with the automation features already built into ad platforms, you're not just running a campaign—you're building a powerful, always-on learning system.

Analyzing Results to Find and Scale Winners

Running a bunch of AI-powered creative tests is the easy part. The real work begins when you have to make sense of the data. All those numbers are just noise until you turn them into insights you can actually use to grow your business. This is where you transform a dashboard into a winning strategy.

Too many marketers get hung up on surface-level metrics like Click-Through Rate (CTR) or Cost Per Click (CPC). Sure, they're good for a quick health check, but they rarely tell the whole story. A killer CTR doesn't mean much if none of those clicks are turning into sales or sign-ups.

To find out what’s really working, you have to connect ad performance to your bottom line. That means focusing on metrics like Conversion Rate (CVR), Customer Lifetime Value (LTV), and, of course, Return On Ad Spend (ROAS).

Look for Winning Elements, Not Just Winning Ads

Here's the most common mistake I see people make: they find one "winner" ad and then just try to clone it. A much smarter approach, especially when you're using AI to test at scale, is to break down your results to find the winning elements.

Go back to that Creative Matrix you built earlier. The goal now is to slice and dice the performance data for each individual component to spot the patterns.

- Headlines: Do headlines framed as a question consistently get more engagement than bold statements?

- Visuals: Are lifestyle shots with people driving a higher CVR than your clean, product-on-white backgrounds?

- Hooks: For video, does a punchy, three-second hook lead to a lower drop-off rate compared to a slower, more cinematic intro?

When you analyze each component this way, you're doing more than just finding a single good ad. You're building a playbook of proven ingredients that you can mix and match in future campaigns. That's how you get consistent results instead of just hoping for a one-off viral hit.
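Slicing results by component is essentially a group-by over your Creative Matrix. A minimal sketch, assuming each result row carries its Ad ID's component labels plus clicks and conversions (all numbers here are invented): it pools the rows that share a headline (or an image) and compares pooled conversion rates. This example is a balanced 2 x 2 of headlines and images; in a hand-picked, unbalanced matrix, pooled rates can mix the effects of different components, so treat them as a first look.

```python
from collections import defaultdict

# Hypothetical per-ad results, joined to the Creative Matrix by Ad ID.
RESULTS = [
    {"ad_id": "RUN-001", "headline": "H1", "image": "IMG1", "clicks": 400, "conversions": 12},
    {"ad_id": "RUN-002", "headline": "H2", "image": "IMG1", "clicks": 380, "conversions": 19},
    {"ad_id": "RUN-003", "headline": "H1", "image": "IMG2", "clicks": 420, "conversions": 21},
    {"ad_id": "RUN-004", "headline": "H2", "image": "IMG2", "clicks": 410, "conversions": 29},
]


def cvr_by_component(rows: list[dict], component: str) -> dict[str, float]:
    """Pool clicks and conversions for each variant of one component and return its CVR."""
    clicks: dict[str, int] = defaultdict(int)
    conversions: dict[str, int] = defaultdict(int)
    for r in rows:
        clicks[r[component]] += r["clicks"]
        conversions[r[component]] += r["conversions"]
    return {v: conversions[v] / clicks[v] for v in clicks if clicks[v]}


if __name__ == "__main__":
    for component in ("headline", "image"):
        ranked = sorted(cvr_by_component(RESULTS, component).items(),
                        key=lambda kv: kv[1], reverse=True)
        print(component, [(variant, f"{cvr:.1%}") for variant, cvr in ranked])
```

In this made-up data, H2 converts at about 6.1% versus 4.0% for H1, and IMG2 at about 6.0% versus 4.0% for IMG1, which is the kind of pattern you would carry into the next round of creative.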

The goal isn't just to find the best ad from this one test. It's to learn that your audience responds best to user-generated content paired with benefit-driven headlines—a formula you can now apply to every future campaign.

Connecting Creative Data to Business Outcomes

Once you’ve pinpointed your high-performing creative elements, the next step is to make sure they're actually driving profitable growth. This means looking beyond the ad platform's dashboard and tying your test results back to your business's core financial data.

For instance, you might find one creative generates leads at a 20% lower CPA. That looks great on the surface. But when you dig into your CRM, you might discover those "cheaper" leads have a terrible conversion rate and a low LTV. Meanwhile, another creative with a slightly higher CPA might be attracting customers who spend more and stick around for years.
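A quick worked example shows how the cheaper-CPA creative can lose. All figures are hypothetical: Creative A gets leads at $40 and Creative B at $50 (so A's CPA is 20% lower), but A's leads close less often and are worth less over their lifetime.

```python
def cost_per_customer(cpa_lead: float, lead_to_customer: float) -> float:
    """Ad spend needed to win one paying customer."""
    return cpa_lead / lead_to_customer


def value_per_dollar(cpa_lead: float, lead_to_customer: float, ltv: float) -> float:
    """Customer lifetime value returned per dollar of ad spend (an LTV-based ROAS)."""
    return ltv / cost_per_customer(cpa_lead, lead_to_customer)


# name: (CPA per lead, lead-to-customer rate, customer LTV); all hypothetical
CREATIVES = {
    "A": (40.0, 0.05, 600.0),
    "B": (50.0, 0.10, 900.0),
}

if __name__ == "__main__":
    for name, (cpa, close_rate, ltv) in CREATIVES.items():
        cac = cost_per_customer(cpa, close_rate)
        print(f"Creative {name}: ${cac:,.0f} per customer, "
              f"{value_per_dollar(cpa, close_rate, ltv):.2f}x LTV per ad dollar")
```

With these numbers, Creative A costs about $800 per customer and returns 0.75x its spend in lifetime value, while Creative B costs about $500 per customer and returns 1.8x, despite its higher lead CPA.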

The impact of these creative choices can be huge. Top e-commerce brands are finding that seemingly small visual tweaks can make a big difference; this guide on how AI-generated visuals can improve conversion rates shows just how much.

A Smarter Way to Scale Your Winners

So, you've found a winning formula. The temptation is to just crank up the budget and watch the sales roll in. But that's often a recipe for disaster. It can lead to rapid ad fatigue, and you'll watch your performance tank as your audience gets sick of seeing the same thing.

Here’s a more strategic way to scale.

- Isolate and Reiterate: Take the winning components—like your top headline style and visual format—and use your AI tool to generate a fresh batch of variations built on that successful formula. This gives you new ads that feel different but are grounded in what you know works.

- Expand to New Audiences: Move your winning creative formulas from your small testing campaign into your main prospecting campaigns. Start showing them to broader lookalike audiences or new interest groups to see if the magic holds up.

- Increase Budgets Slowly: When you do raise the spend, don't shock the system. A sudden, massive budget increase can throw the ad platform's algorithm for a loop and reset its learning phase. Stick to gradual increases of no more than 20-25% every few days to keep performance stable.
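To see what "increase budgets slowly" means in practice, this sketch computes a ramp schedule. The 20% step and the 3-day interval are assumptions chosen from the range suggested above, and the $100 starting and $500 target daily budgets are hypothetical.

```python
def budget_ramp(start: float, target: float, step_pct: float = 0.20,
                days_between: int = 3) -> list[tuple[int, float]]:
    """Return (day, daily_budget) pairs, raising the budget by step_pct each interval until target."""
    if start <= 0 or step_pct <= 0:
        raise ValueError("start and step_pct must be positive")
    schedule = [(0, round(start, 2))]
    day, budget = 0, start
    while budget < target:
        day += days_between
        budget = min(budget * (1 + step_pct), target)  # never overshoot the target
        schedule.append((day, round(budget, 2)))
    return schedule


if __name__ == "__main__":
    for day, budget in budget_ramp(100.0, 500.0):
        print(f"day {day:>2}: ${budget:,.2f}/day")
```

At this pace, going from $100/day to $500/day takes nine increases, or 27 days, which is a useful reality check before promising a fast scale-up.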

This methodical process—analyzing, iterating, and scaling—turns creative testing from a one-time project into a continuous optimization engine that fuels real, sustainable growth.

Got Questions About AI Ad Creative Testing?

Stepping into an AI-driven testing workflow is a big move, and it's totally normal for a few questions to pop up. Let's tackle some of the most common ones I hear from marketers so you can move forward with confidence.

How Much Budget Do I Really Need to Start?

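There's no magic number here, but I always tell people to aim for enough budget to get at least 100 conversions per creative variant. Treat that as a rule of thumb rather than a guarantee: how much data you need to rule out a fluke depends on how large the gap between variants is, and small differences take far more conversions to confirm.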

For a platform like Meta, a good starting point for a dedicated testing campaign is often $50 to $100 a day. The goal here isn't immediate ROAS—it's learning velocity. You're spending a small, controlled amount to quickly figure out what works.

I find it helpful to think of it as two separate buckets: a smaller "testing budget" for discovery and a much larger "scaling budget" for your proven winners. The beauty of AI is how it makes your testing budget work harder, automatically shifting spend away from the duds to minimize waste.

Is AI Going to Replace My Creative Team?

Not a chance. Think of AI as a powerful creative partner, not a replacement. The best results always come from a smart division of labor between human insight and machine execution.

Your team is still the source of the strategic "big idea." They understand the market, the brand's voice, and the emotional core of a campaign. The creative director still sets the destination.

AI is the super-efficient engine that gets you there. It can take a single human-conceived concept and spin it into hundreds of variations, exploring every possible angle at a scale no team could ever match.

AI can’t create a brand's soul, but it's brilliant at finding the most resonant way to express it. That human-machine collaboration is where the magic happens.

What Are the Biggest Mistakes People Make With Creative Testing?

Even with the best tools, it's surprisingly easy to fall into a few common traps that can completely derail your tests. Knowing what they are is half the battle.

Here are the top three I see all the time:

- Testing too many things at once. Just throwing a dozen different headlines, images, and CTAs into the ring without a plan is a recipe for confusion. You’ll have no idea what actually caused the lift. This is exactly why a structured creative matrix is non-negotiable.

- Calling it quits too early. I know it's tempting, but making a decision after just a day or two of data is a classic mistake. You need to let tests run long enough to get enough data and ride out the natural daily ups and downs.

- Obsessing over surface-level metrics. A sky-high click-through rate (CTR) feels great, but it's a vanity metric if those clicks don't turn into customers. Always, always analyze the full funnel to see the real business impact.
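A simple way to check whether a difference in conversion rate is more than daily noise is a two-proportion z-test. This sketch uses only the Python standard library; the click and conversion counts are hypothetical, and the common 0.05 significance threshold is a convention, not a law. Ad platforms may use different (often Bayesian) methods in their own reporting.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: variants A and B share the same conversion rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate under H0
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)), written via the complementary error function.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Same observed rates (2.5% vs 4%) after a short and a longer run; hypothetical counts.
    for conv_a, conv_b, clicks in ((10, 16, 400), (70, 112, 2_800)):
        z, p = two_proportion_z_test(conv_a, clicks, conv_b, clicks)
        print(f"{clicks} clicks each: z = {z:.2f}, p = {p:.3f}")
```

With identical observed rates, 400 clicks per variant gives a p-value of roughly 0.23 (inconclusive), while 2,800 clicks per variant pushes it below 0.01. That gap is exactly why calling a test after a day or two is risky.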

How Do I Pick the Right AI Tool?

The "best" tool is the one that solves your biggest bottleneck right now. Don't get caught up searching for one perfect tool that does everything. Instead, find the one that plugs your most immediate gap.

Start by being honest about where your team struggles most.

- Stuck on copywriting? A tool like Jasper or Copy.ai can be a game-changer for generating endless headlines and ad copy.

- Need more visuals? Midjourney or DALL-E 3 are incredible for producing unique, high-quality images from simple text prompts.

- Overwhelmed by the whole process? Platforms like AdCreative.ai or Pencil offer more end-to-end solutions that help with both generation and campaign management.

The smart move? Most of these platforms offer free trials. Pick one or two that target your biggest pain point, see how they feel in your actual workflow, and only commit once you've seen a real impact.

Ready to stop guessing and start generating ads that win? With ShortGenius, you can produce high-performing video and image ads for all major platforms in seconds. Go from idea to a full campaign of testable creative variations without needing a big production team. Start creating with ShortGenius today.